./articles/ GenAI and LLM Questions with In-Depth Answers

The rapid advancements in Generative AI and Large Language Models (LLMs) have brought transformative changes to industries ranging from customer support to creative content generation. As organizations and professionals dive into this exciting domain, understanding the key concepts and gaining practical knowledge is crucial.

This guide serves as a comprehensive resource for anyone preparing for interviews or aiming to deepen their expertise in LLMs. Each topic is composed of a wide range of questions, carefully curated to cover foundational concepts, practical applications, and advanced topics. The questions in this guide are derived from the repository LLM Interview Questions, a community-driven resource dedicated to fostering knowledge and preparation in the field of LLMs.

The questions tackle diverse areas such as Prompt Engineering and Basics of LLM, exploring foundational principles like understanding predictive vs. generative AI, key concepts in language model training, and decoding strategies, as well as advanced aspects like in-context learning and strategies to optimize prompt writing for enhanced model performance. These topics highlight not only theoretical knowledge but also practical approaches to improving the reasoning and utility of LLMs across varied use cases.

Quick Navigation

1. Basics of LLM

1.1 What is the difference between Predictive/Discriminative AI and Generative AI?▼

Predictive AI, also known as discriminative AI, focuses on predicting the output based on input data. It aims to learn the relationship between input features and output labels, making predictions based on patterns in the training data. Common examples of predictive AI include classification and regression tasks, where the model predicts discrete classes or continuous values, respectively.

Core Characteristics:

- Focuses on learning \(P(y|x)\) - the probability of output y given input x

- Optimized for accuracy in classification and prediction tasks

- Efficient in resource usage compared to generative models

- Typically requires less training data for good performance

- Better suited for tasks with clear decision boundaries

Key Technical Aspects of Predictive AI you must known:

- Feature Engineering: The process of manually or automatically deriving meaningful features from raw data to improve model performance.

- Loss Functions: Metrics used to quantify prediction errors, including cross-entropy, hinge loss, and mean squared error (MSE).

- Optimization: Techniques such as gradient descent and stochastic optimization to minimize the loss function during training.

- Regularization: Methods like L1/L2 regularization, dropout, and batch normalization to prevent overfitting and improve generalization.

Generative AI, on the other hand, is designed to generate new data that resembles the training data distribution. Instead of predicting a specific output, generative models learn the underlying structure of the data and generate new samples that are similar to the training examples. These models can create new images, text, audio, or other types of data based on the patterns they have learned during training.

Core Characteristics:

- Models the complete joint probability distribution \(P(x,y)\)

- Creates novel, coherent outputs across various domains

- Can handle multiple tasks including content generation and understanding

- Requires larger datasets and computational resources

- Capable of unsupervised and self-supervised learning

Key Examples of GenAI Architectures to know:

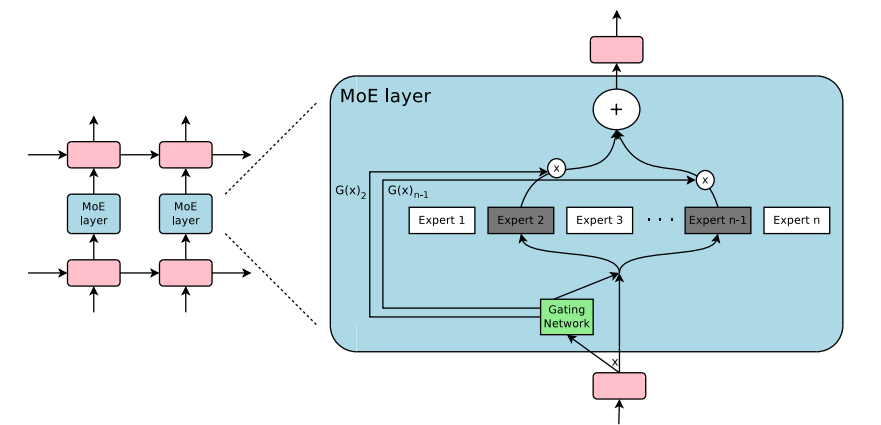

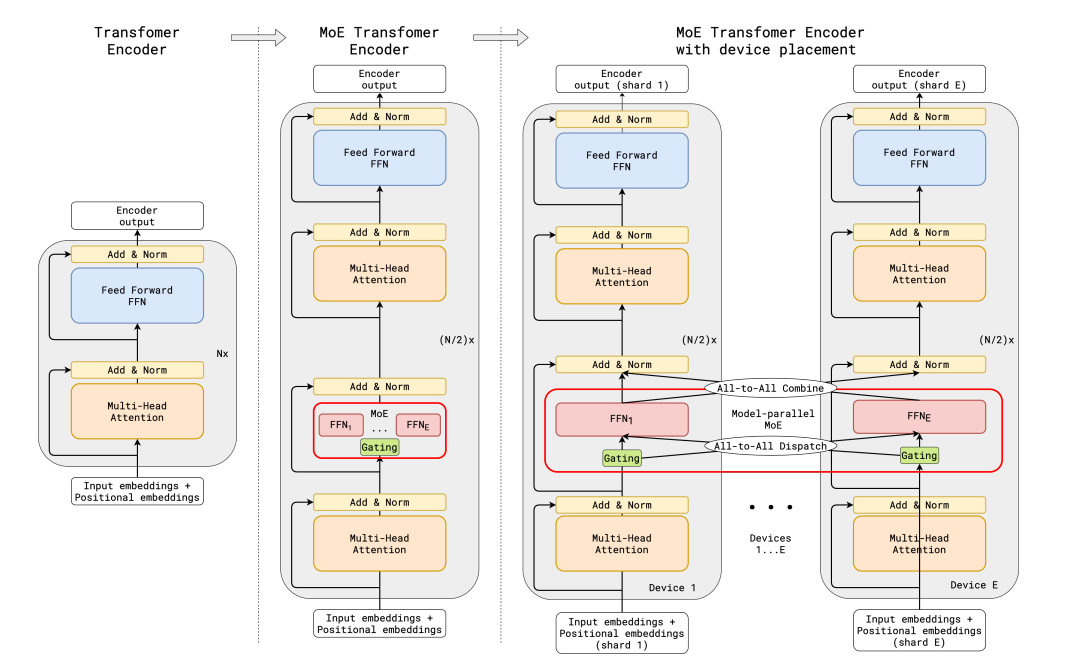

- Transformer Models: Use self-attention mechanisms, which allow the model to weigh the importance of different words in a sentence, and multi-head attention, which enables the model to focus on different parts of the sentence simultaneously for better context understanding.

- Generative Adversarial Networks (GANs): Utilize a generator, which creates data samples, and a discriminator, which evaluates them, in a competitive framework to create realistic data samples.

- Diffusion Models: Use denoising processes, which iteratively refine noisy data to generate high-quality outputs, and U-Net architectures, which are convolutional neural networks designed for image segmentation and generation.

- Variational Autoencoders (VAEs): Focus on latent space modeling using the reparameterization trick, which allows for backpropagation through stochastic variables by introducing a differentiable approximation, and minimize reconstruction loss for generative tasks.

1.2 What is LLM, and how are LLMs trained?▼

LLM (Large Language Model) is an AI system trained to understand and generate human-like text by processing vast amounts of textual data. These models represent the cutting edge of natural language processing technology.

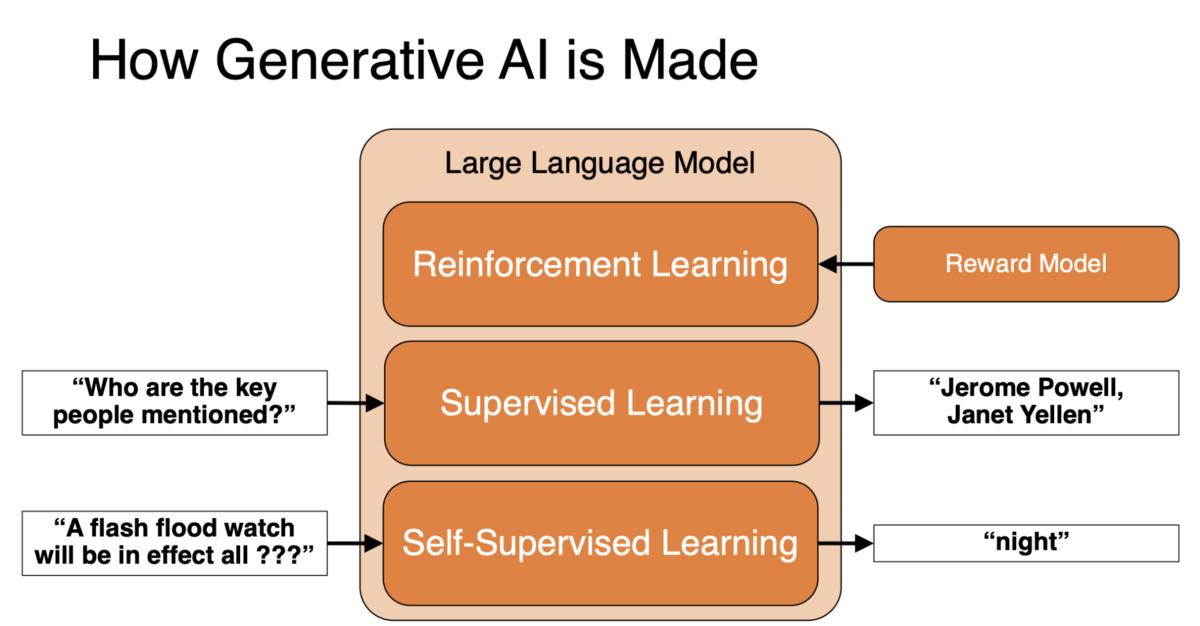

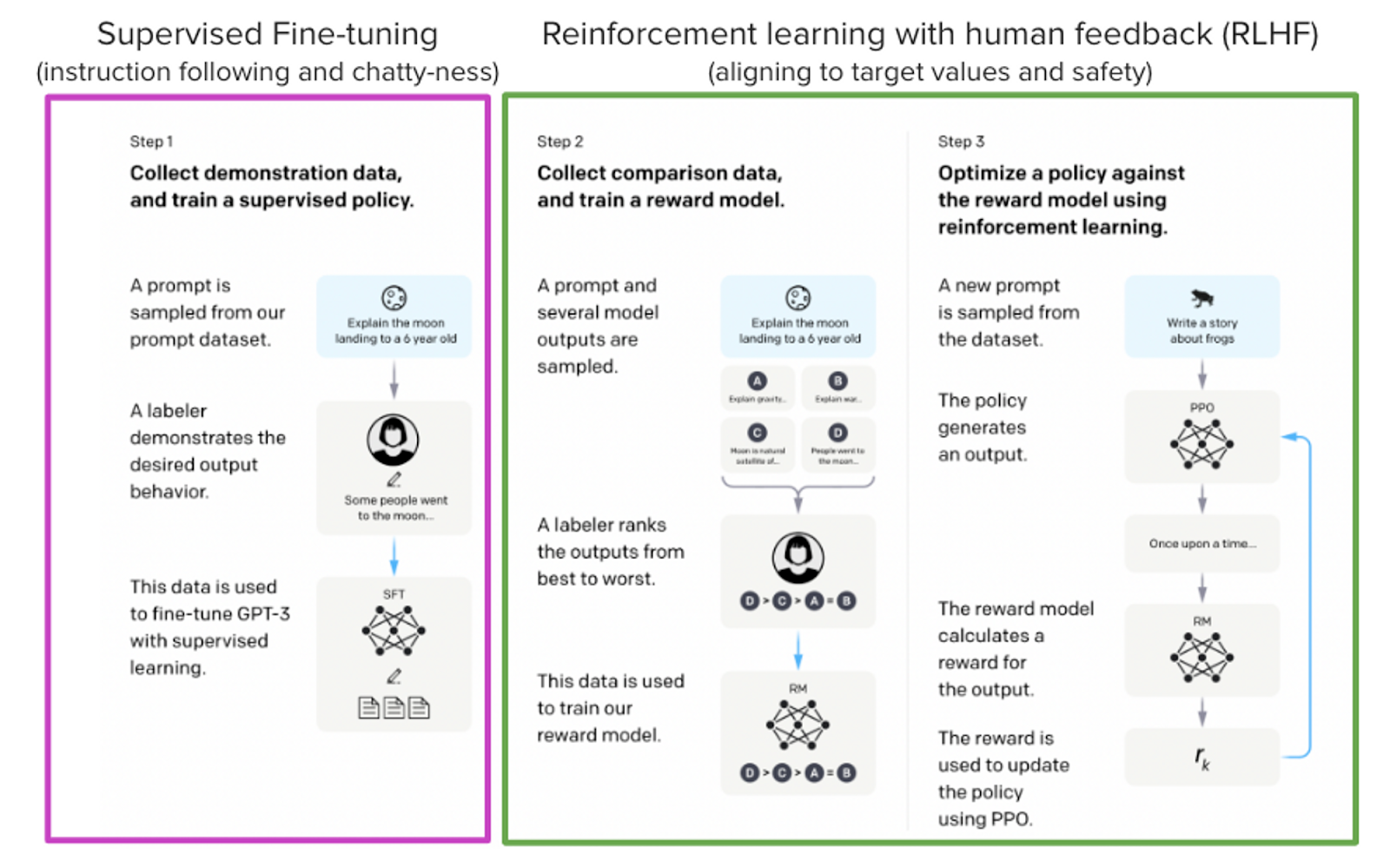

Training large language models is a multi-layered stack of processes, each playing a unique role in shaping the model's performance. The three main phases are:

- Self-supervised learning

- Supervised learning

- Reinforcement learning

Phase 1: Self-Supervised Learning for Language Understanding

Self-supervised learning, the first phase of training, is typically what comes to mind when discussing language modeling. This process involves exposing the model to vast amounts of unannotated or raw data and instructing it to predict the ‘missing’ elements within that data. Through this, the model learns about both language and the underlying domain to generate plausible responses.

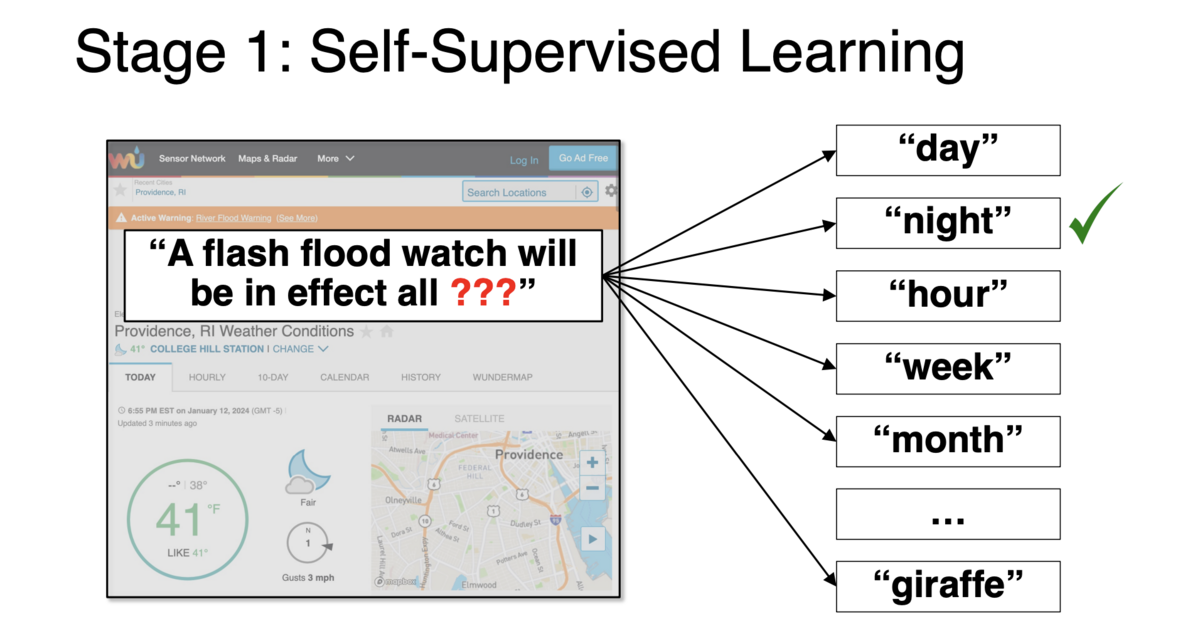

For instance, if we provide the model with text from a weather website and ask it to predict the next word, the model must comprehend the language and the context of the weather domain. In my presentation, I used the example: “A flash flood watch will be in effect all _____.” At an intermediate stage, the model ranks possible predictions, from the most likely answers (“day,” “night,” “hour”) to those that are less probable (“month”), and even to nonsensical ones (“giraffe”) that receive low probability scores.

This process is called self-supervision (distinct from unsupervised learning) because there is a specific, correct answer—the word that appeared in the collected text, which in this case was “night.” While self-supervision shares similarities with unsupervised learning, it is distinct in that it focuses on predicting specific correct outcomes, even within the context of abundant, unannotated data.

Phase 2: Supervised Learning for Instruction Understanding

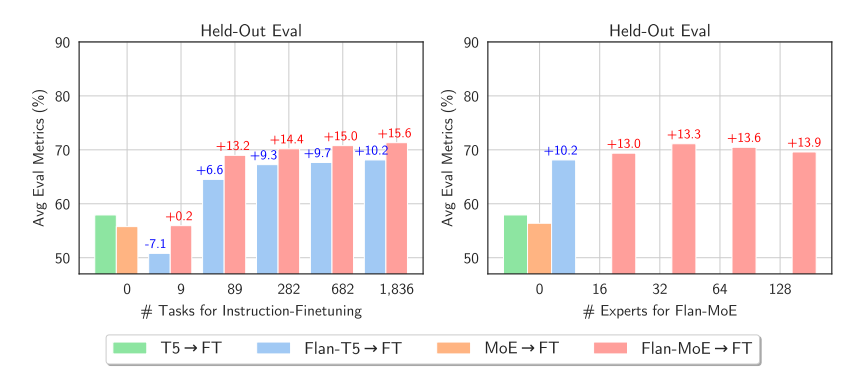

Supervised learning, or instruction tuning, marks the second stage in the training process of large language models (LLMs). This phase is vital, building upon the foundational knowledge established during the self-supervised learning phase. In this phase, the model is trained specifically to follow instructions. Unlike self-supervised learning, which focuses on predicting words and completing sentences, instruction tuning teaches the model to understand and respond to explicit user requests. This shift makes the model significantly more interactive and useful in real-world applications. The impact of instruction tuning on enhancing LLM capabilities has been demonstrated through numerous studies, including those led by Snorkel researchers. One key outcome was that models trained with instruction tuning performed better at generalizing to new, unseen tasks. This is a major achievement, as the ability to effectively handle unfamiliar tasks is a central goal of machine learning models. Given its proven success, instruction tuning has become a standard practice in LLM training. Once this phase is completed, the model is no longer just predicting the next word—it’s trained to engage with users, comprehend their instructions, and provide meaningful, context-aware responses.

Phase 3: Reinforcement Learning to Promote Desired Behavior

The final stage in training large language models (LLMs) is reinforcement learning, a critical process that fine-tunes the model by encouraging desired behaviors and discouraging undesirable outputs. Unlike earlier stages, this phase doesn’t provide the model with specific target outputs but instead evaluates and “grades” the responses the model generates.

Although reinforcement learning has a long history in machine learning, its application to LLM training was first proposed by OpenAI after the advent of instruction tuning. The process begins with a model already capable of understanding instructions and generating coherent language. Human annotations are then used to compare model outputs, identifying which responses are better or worse. These comparisons help guide the creation of a reward model, which assigns quantitative scores to the quality of the outputs. The reward model plays a pivotal role by scaling feedback to the model, steering it toward generating preferred responses while penalizing undesired ones. This method is particularly effective for fostering nuanced behaviors, such as encouraging brevity or discouraging harmful or inappropriate language. As a result, the model learns to produce higher-quality, context-sensitive responses.

This process, known as reinforcement learning with human feedback (RLHF), underscores the value of human involvement in shaping the model's behavior. By incorporating human preferences into the training loop, RLHF ensures the model aligns more closely with user expectations and ethical standards, delivering a safer and more user-centric experience.

1.3 What is a token in the language model?▼

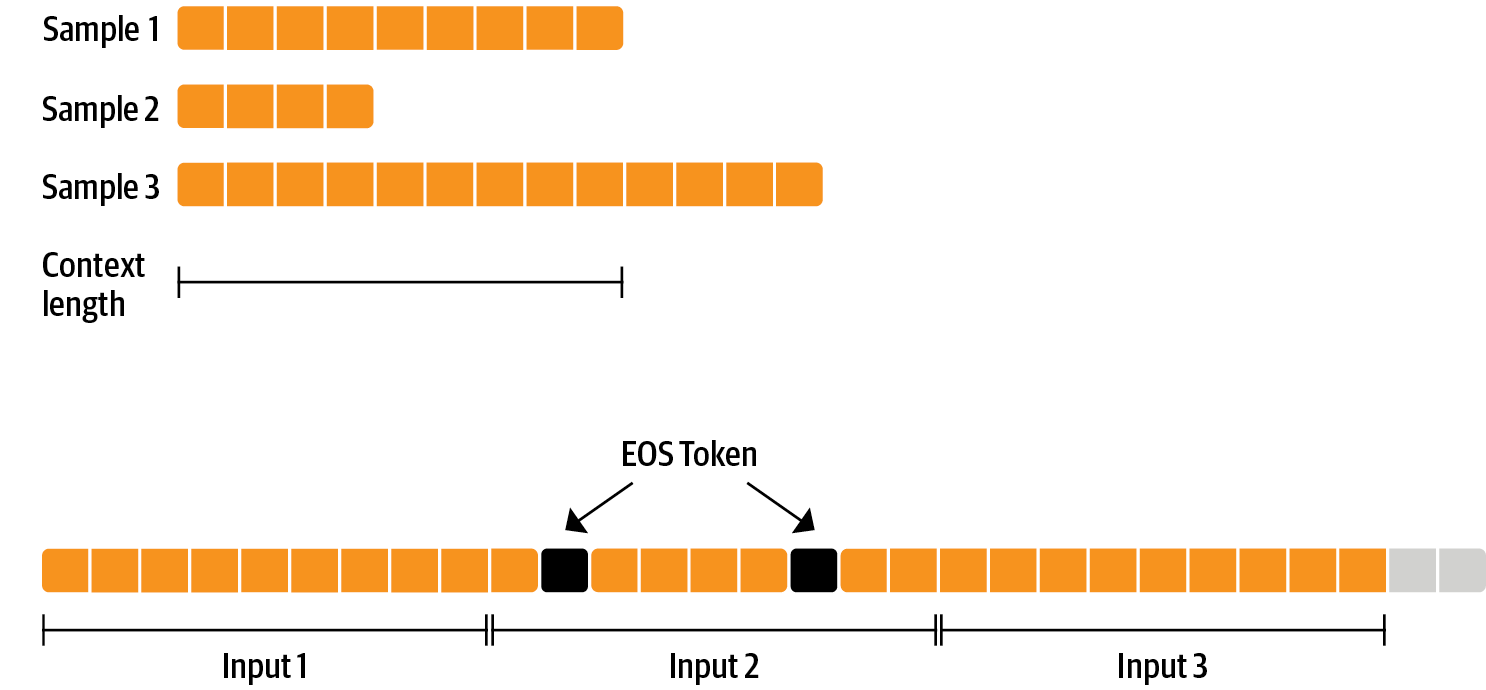

In the context of language models, a token refers to the smallest unit of text that the model processes. Tokens can represent individual words, subwords, or characters, depending on the tokenization strategy used. The choice of tokenization impacts the model's ability to understand and generate text.

Each language model has a context window—a limit on the number of tokens it can handle at a time. This context window defines how much text the model can "remember" and process simultaneously. For example, a model with a 4,000-token context window can work with approximately 3,000–4,000 words of text at once, depending on the complexity of the language and the average token length.

The vector representation of a token is called an embedding. These vectors capture the meaning, context, and relationships between tokens in a form that the model can compute.

1.4 How to estimate the cost of running SaaS-based and Open Source LLM models?▼

These are subscription-based or pay-per-use services that provide hosted LLMs. SaaS-based LLM services charge based on processed tokens (both input and output). For example, GPT-4 charges $0.03 for input tokens and $0.06 for output tokens per 1,000 tokens. The total cost can be calculated by multiplying the sum of input and output tokens by the cost per token.

Total Cost = (Tokens Input + Tokens Output) × Cost Per Token

Other costs include compute tier options (with different pricing for different models), monthly subscription fees for premium access, and possible additional charges for reserved capacity to ensure low latency or scalability.

With SaaS, there’s no need for infrastructure setup or maintenance. The service scales automatically, making it ideal for prototypes or low-volume usage.

On the other hand, Open Source LLM models are free to use but require infrastructure setup and maintenance. For self-hosted open-source models, compute costs can vary widely depending on the choice of infrastructure. Cloud-based GPU instances range from $1 to $6 per hour per GPU, with an average cost for an NVIDIA A100 instance being around $2.5 per hour. The compute cost is calculated by multiplying the instance cost by the number of hours used.

Cost (Compute) = (Instance Cost) × (Hours of Use)

Open-source LLMs also incur storage and networking costs (e.g., 100GB+ for large models), energy costs for continuous operation, personnel costs for engineering and maintenance, and possible software licensing fees for supporting software.

Open source LLMs offer full control over the model and infrastructure. They can be customized for specific needs and may incur lower long-term costs for high-volume usage.

Comparison table

| Factor | SaaS-Based | Open-Source |

|---|---|---|

| Ease of Setup | Instant, minimal configuration | Significant setup and maintenance |

| Scalability | Automatic, scales with need | Manual provisioning required |

| Costs for Low Usage | Lower for prototypes | Higher initial setup cost |

| Costs for High Usage | Scales linearly, can be expensive | More cost-effective long-term |

| Customizability | Limited by vendor | Full control |

| Operational Control | Relies on vendor | Complete independence |

1.5 Explain the Temperature parameter and how to set it.▼

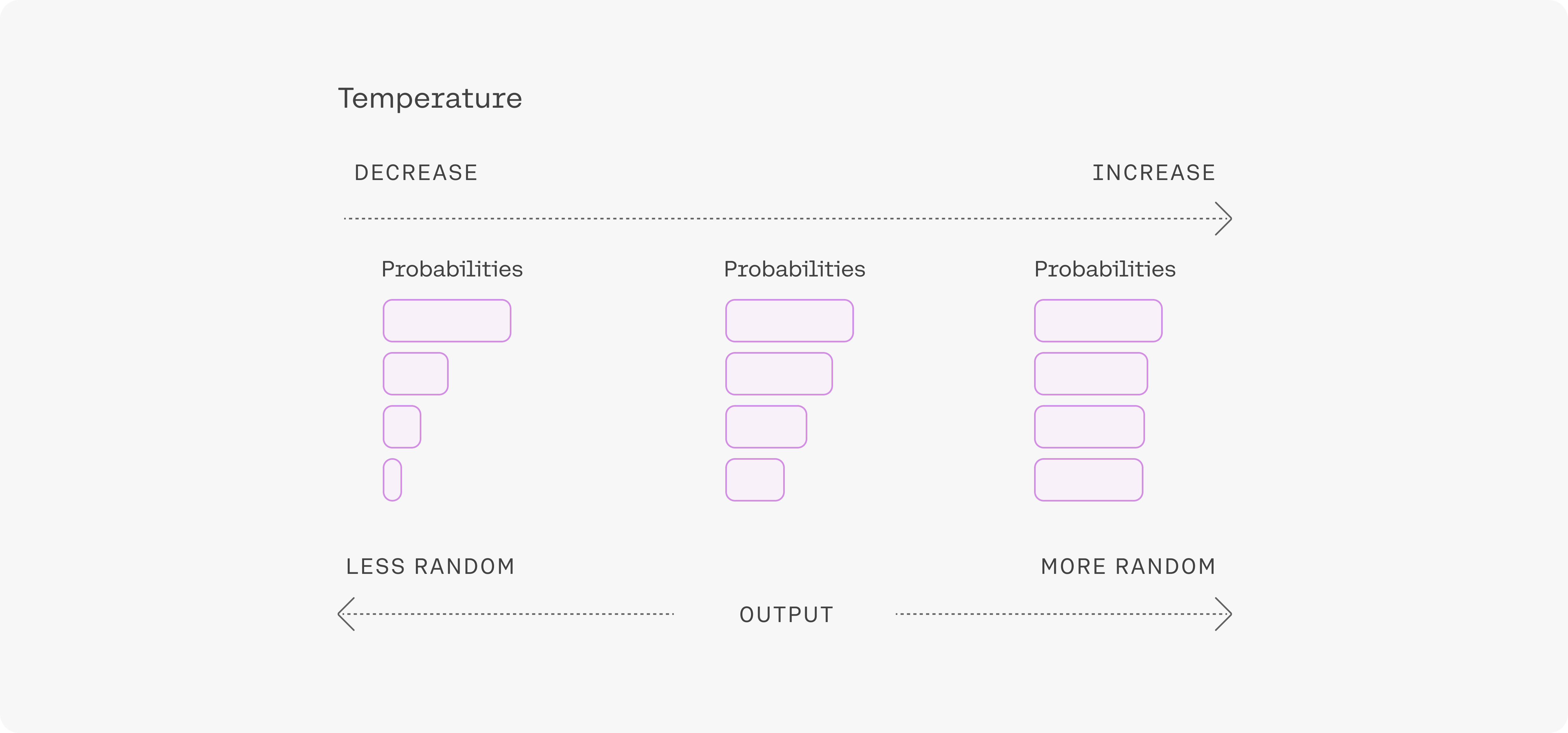

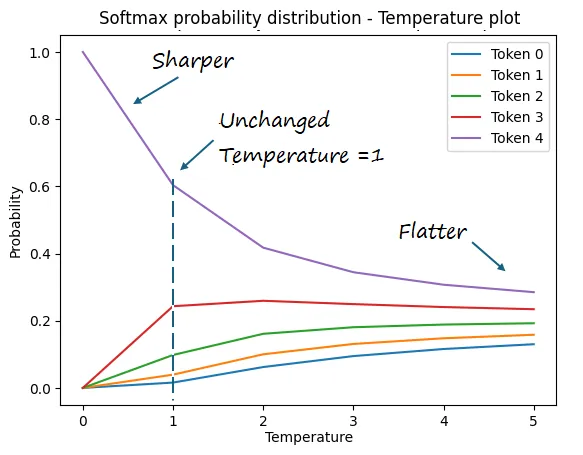

The Temperature parameter is used in language models to control the level of randomness in the text generation process. It is particularly useful for fine-tuning the creativity and variability of the model’s responses.

In simple terms, the temperature adjusts the "steepness" of the probability distribution over possible next words or tokens. When generating text, a model selects tokens based on a distribution of probabilities. A lower temperature (e.g., 0.1) makes the model more deterministic, meaning it is more likely to select the most probable next token, leading to more predictable and coherent text. A higher temperature (e.g., 1.0) adds more randomness to the selection, allowing for more creative or unexpected outputs.

The temperature parameter works by modifying the logits (raw scores) of the possible next tokens before converting them to probabilities using the softmax function. The formula for calculating the probability distribution for the next token given the logits is:

\[P(w_i) = exp(logit(w_i) / T) / Σ exp(logit(w_j) / T) \]

Here, \(w_i\) refers to the ith token in the vocabulary, and \(logit(w_i)\) is the unscaled score for that token. The parameter T is the temperature:

- T = 0: This means maximum determinism. The model always selects the most probable token (argmax sampling). However, most implementations prevent using an exact 0 since it removes any stochasticity altogether.

- T between 0 and 1: Sharper probability distributions, making high-probability tokens even more dominant. Lower values reduce randomness and make the output more focused and predictable.

- T = 1: No adjustment to the logits. The original probability distribution is used.

- T > 1: Flattens the probability distribution, increasing randomness. Less probable tokens become more likely to be chosen, producing creative or exploratory outputs.

The temperature parameter is often adjusted through an API or in the model configuration. If you are interacting with an API, you will usually specify the temperature in the request. Here's a typical API call setting:

{

"model": "gpt-4",

"temperature": 0.7,

"prompt": "Describe a sunset over a mountain range."

}

Experimenting with different temperature settings can help achieve the desired tone and level of creativity in the responses. Lower temperatures are appropriate for factual, straightforward tasks, while higher temperatures are useful for generating new ideas, brainstorming, or creative writing.

1.6 What are different decoding strategies for picking output tokens?▼

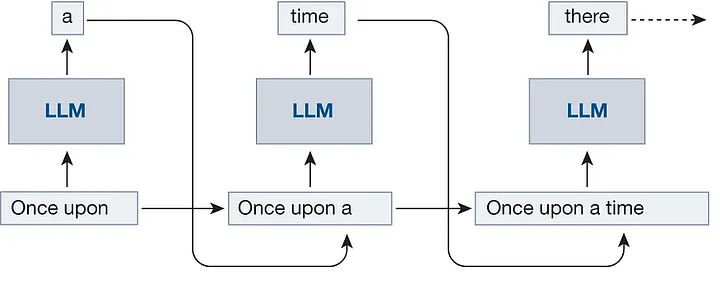

Language models are pre-trained to predict the next token in a text corpus. Decoding strategies determine how to select the next token based on the probability distribution over a fixed vocabulary. The process of selecting these tokens, known as decoding, plays a crucial role in shaping the output text. By tailoring the decoding approach, you can customize text generation to suit specific needs. Depending on the decoding method, the model may choose the most probable token, consider multiple top candidates, or introduce randomness for variety.

An effective decoding strategy transforms a language model from a simple next-token predictor into a powerful text generator capable of handling diverse tasks. This raises two key questions: "What are the different decoding strategies?" and "How do they influence the output generated by language models?".

The simplest way to implement a sampling function is to select the next token with the highest probability at each step, an approach known as Greedy Search. This straightforward method is fast and efficient because it prioritizes the most probable token every time. However, this predictability often results in repetitive or unoriginal text, making it unsuitable for generating creative content. Can this be improved? Absolutely.

Beam search offers an enhancement by maintaining a beam of the K most probable sequences at each time step, where K is the beam width. The process continues until a maximum sequence length is reached or an end-of-sequence token is generated. Beam search typically results in higher-quality text than greedy search, but it requires more computational effort.

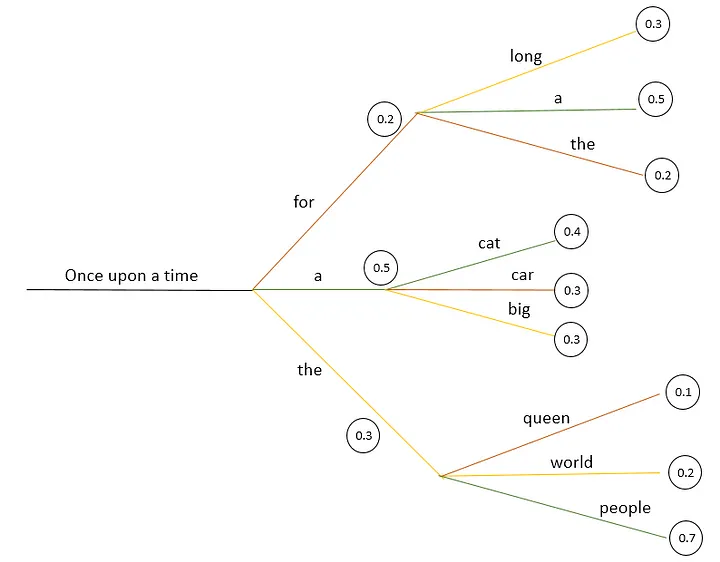

Suppose the initial sequence is "Once upon a time" and K=2.

- The two most likely tokens are "a" and "the".

-

In the next step:

-

The sequence ("a", "cat") has a probability of

0.20 (

0.5 × 0.4). -

The sequence ("the", "people") has a probability of

0.21 (

0.3 × 0.7).

-

The sequence ("a", "cat") has a probability of

0.20 (

- Beam search selects the sequence "the people" as it has the higher cumulative probability.

The above methods that choose the most probable next token at each step are called Deterministic methods. These methods produce output text ensuring predictability but often at the expense of diversity. So if you are looking for creative writing, we need some better mechanisms.

To overcome the limitations of deterministic methods in generating varied and creative text, stochastic methods introduce randomness into the selection process. These methods reduce predictability, creating outputs that are less repetitive and more diverse.

When generating text, stochastic methods often rely on strategies that prioritize tokens with higher probabilities while maintaining a degree of randomness. Popular approaches for achieving this balance are ramdom sampling, temperature sampling, Top-k sampling and Top-p sampling, each offering unique mechanisms for selecting the next token.

The simplest stochastic approach is random sampling, where the next token is sampled from the probability distribution:

def sample(p):

return np.random.choice(np.arange(p.shape[-1]), p=p)

With this method, each execution produces different outputs. However, while less predictable, this approach may result in incoherent text. To achieve varied yet coherent results, we need more refined strategies.

Temperature sampling, as discuss earlier, adjusts the likelihood of selecting tokens by altering the temperature of the softmax function, which transforms the model’s logits into probabilities.

Consider tokens with logits [1, 2, 3, 4, 5]. The following code plots the temperature-controlled softmax probability distribution:

from matplotlib import pyplot

import torch

depth = range(6)

logits = torch.tensor([1, 2, 3, 4, 5])

prob_list = []

for temperature in depth:

prob = torch.nn.functional.softmax(logits / (temperature + 0.1), dim=-1)

prob_list.append(prob.numpy())

pyplot.plot(depth, prob_list)

pyplot.xlabel("Temperature")

pyplot.ylabel("Probability")

pyplot.title("Temperature-Controlled Softmax Distribution")

pyplot.show()

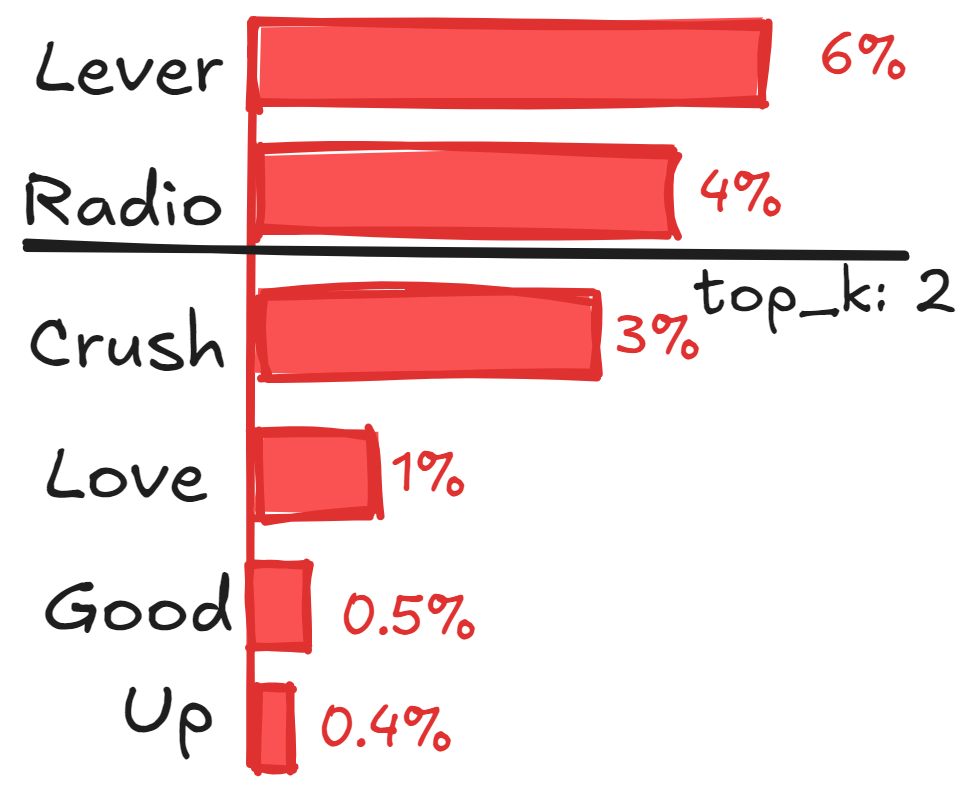

Top-k sampling limits selection to the k most probable tokens. For instance, if k=2 and token probabilities are:

\( P(T_0) = 0.6, P(T_1) = 0.4, P(T_2) = 0.3, P(T_3) = 0.1, P(T_4) = 0.05, P(T_5) = 0.04 \)

The tokens \( T_3, T_4, T_5 \) are excluded, and the remaining probabilities are redistributed.

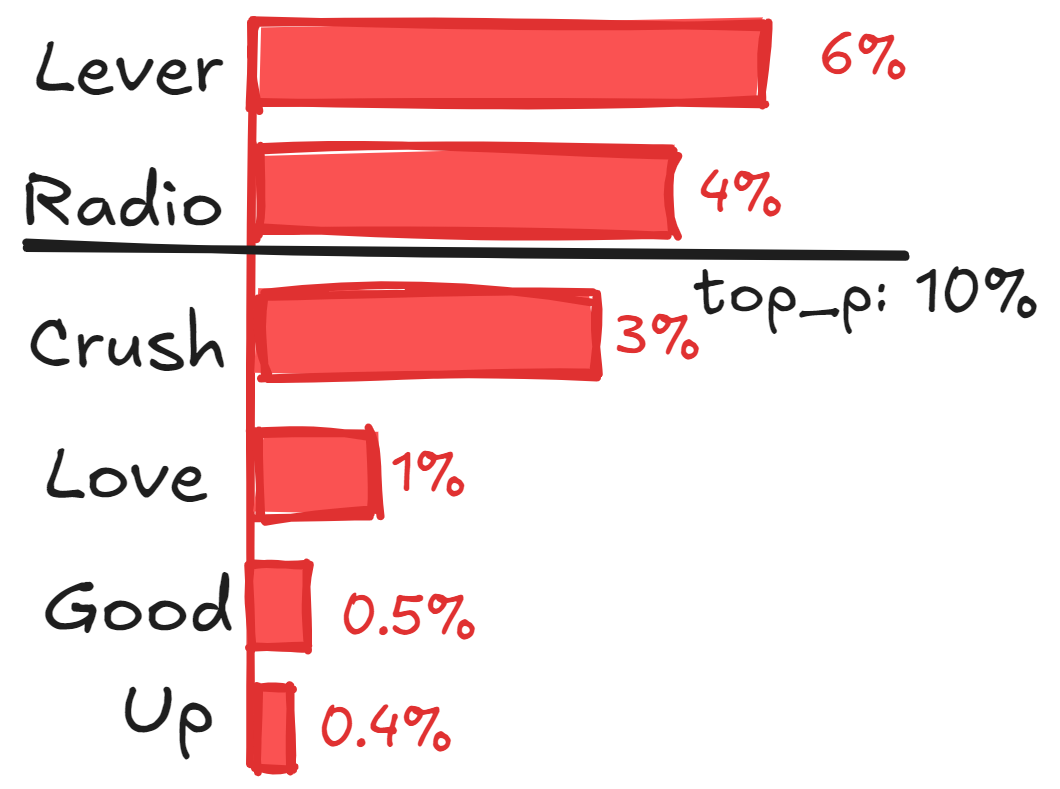

Top-p Sampling — chooses from the possible set of words whose cumulative probability exceeds the probability p. The probability mass is then redistributed among this set of words. For p = 0.95, Top-p sampling picks the minimum number of tokens to exceed together p = 95% of the probability mass. Unlike Top-k sampling which has a fixed number of tokens k, this mechanism allows the number of tokens in a set to dynamically increase or decrease according to the next word’s probability distribution. Choosing a set of high-probability tokens removes the very unlikely low-probability values, thus helping to generate diverse and coherent text, making it very popular for text generation. This Top-p sampling is also known as Nucleus sampling.

Combining top-p and top-k sampling can further refine the balance between randomness and coherence.

1.7 What are different ways you can define stopping criteria in large language model?▼

One common approach is to set a predefined token limit. By specifying the maximum length of the output, we can ensure that the generated text doesn’t exceed a certain number of tokens. This is particularly useful in cases like generating headlines or summaries where brevity is essential. For more structured tasks, minimum and maximum length thresholds can be combined. This ensures the output is neither too short to be meaningful nor too long to remain concise. For example, when generating summaries, you might require the output to be at least 30 tokens but no more than 100 tokens long.

response = model.generate(input, min_length=30, max_length=100)

Another widely used technique involves relying on the end-of-sequence (EOS) token. The model generates tokens until it predicts this special token, which signals that the sequence has concluded naturally. This method is ideal for scenarios like document generation or conversational models, where the model's training data inherently includes a concept of when sequences should terminate.

if token == eos_token:

break

To prevent outputs from becoming repetitive or looping endlessly, a repetition-based stopping criterion or frequency penalty is often applied. This involves checking the sequence of tokens generated so far and ending the process if significant repetition is detected.

if sequence in history:

break

In dynamic and real-time applications like chatbots, a time-based stopping criterion might be more appropriate. Here, the model ceases generation once a specified duration has passed. This ensures timely responses and enhances the interactive experience for users.

Another advanced approach involves semantic analysis. Instead of setting hard rules based on token counts or repetition, the model evaluates the coherence and relevance of its generated tokens. If the semantic quality drops below a specific threshold, the generation is stopped. Probabilistic methods can also guide stopping criteria. For instance, by monitoring the probabilities associated with predicted tokens, you could stop when the highest-probability token falls below a certain threshold, indicating that the model is uncertain. This method is useful in precision-critical tasks.

In many cases, a combination of criteria yields the best results. For example, combining a token limit, EOS token detection, and repetition-based stopping can create robust rules for most tasks.

1.8 How to use stop sequences in LLMs?▼

Once you've defined the stop sequence, you'll typically pass it as a parameter when making the request to generate text from an LLM API.

If you’re using OpenAI's API to generate text, you can specify the stop parameter in the API request.

import openai

response = openai.Completion.create(

engine="text-davinci-003", # The model to use

prompt="Please write a creative ending for Dragon Ball.", # The text prompt

stop=["END", "===END==="], # List of stop sequences to terminate the generation

max_tokens=100 # Limit the maximum number of tokens (words/pieces of text)

)

print(response.choices[0].text.strip())

2. Prompt Engineering

2.1 Explain the basic structure of prompt engineering.▼

Effective prompting can unlock the full potential of large language models (LLMs). While simple prompts can yield results, the quality of the output improves with well-crafted instructions and sufficient context.

A prompt contains any of the following elements:

- Instruction - a specific task or instruction you want the model to perform

- Context - external information or additional context that can steer the model to better responses

- Input Data - the input or question that we are interested to find a response for

- Output Indicator - the type or format of the output.

To demonstrate the prompt elements better, here is a simple prompt that aims to perform a text classification task:

Prompt: Classify the text into neutral, negative, or positive Text: I think the food was okay. Sentiment:

In the prompt example above, the instruction corresponds to the classification task, "Classify the text into neutral, negative, or positive". The input data corresponds to the "I think the food was okay." part, and the output indicator used is "Sentiment:". Note that this basic example doesn't use context but this can also be provided as part of the prompt. For instance, the context for this text classification prompt can be additional examples provided as part of the prompt to help the model better understand the task and steer the type of outputs that you expect. You do not need all four elements for a prompt and the format depends on the task at hand. We will touch on more concrete examples in upcoming guides.

As you get started with designing prompts, you should keep in mind that it is really an iterative process that requires a lot of experimentation to get optimal results. Using a simple playground from OpenAI or Cohere is a good starting point. You can start with simple prompts and keep adding more elements and context as you aim for better results. Iterating your prompt along the way is vital for this reason. As you read the guide, you will see many examples where specificity, simplicity, and conciseness will often give you better results. When you have a big task that involves many different subtasks, you can try to break down the task into simpler subtasks and keep building up as you get better results. This avoids adding too much complexity to the prompt design process at the beginning.

You can design effective prompts for various simple tasks by using commands to instruct the model what you want to achieve, such as "Write", "Classify", "Summarize", "Translate", "Order", etc. Keep in mind that you also need to experiment a lot to see what works best. Try different instructions with different keywords, contexts, and data and see what works best for your particular use case and task. Usually, the more specific and relevant the context is to the task you are trying to perform, the better. We will touch on the importance of sampling and adding more context in the upcoming guides. Others recommend that you place instructions at the beginning of the prompt. Another recommendation is to use some clear separator like "###" to separate the instruction and context.

Prompt:

### Instruction ###

Translate the text below to Spanish:

Text: "hello!"

Output: ¡Hola!

Be very specific about the instruction and task you want the model to perform. The more descriptive and detailed the prompt is, the better the results. This is particularly important when you have a desired outcome or style of generation you are seeking. There aren't specific tokens or keywords that lead to better results. It's more important to have a good format and descriptive prompt. In fact, providing examples in the prompt is very effective to get desired output in specific formats. When designing prompts, you should also keep in mind the length of the prompt as there are limitations regarding how long the prompt can be. Thinking about how specific and detailed you should be. Including too many unnecessary details is not necessarily a good approach. The details should be relevant and contribute to the task at hand. This is something you will need to experiment with a lot. We encourage a lot of experimentation and iteration to optimize prompts for your applications.

Prompt:

Extract the name of places in the following text.

Desired format:

Place: <comma_separated_list_of_places>

Input: "Although these developments are encouraging to researchers, much is still a mystery.

“We often have a black box between the brain and the effect we see in the periphery,” says

Henrique Veiga-Fernandes, a neuroimmunologist at the Champalimaud Centre for the Unknown in Lisbon.

“If we want to use it in the therapeutic context, we actually need to understand the mechanism.“"

Output:

Place: Champalimaud Centre for the Unknown, Lisbon

It's often better to be specific and direct. The more direct, the more effective the message gets across. For example, you might be interested in learning the concept of prompt engineering. You might try something like:

### Instruction ###

Explain the concept prompt engineering.

Keep the explanation short, only a few sentences, and don't be too descriptive.

It's not clear from the prompt above how many sentences to use and what style. You might still somewhat get good responses with the above prompts but the better prompt would be one that is very specific, concise, and to the point. Something like:

Use 2-3 sentences to explain the concept of prompt engineering to a high school student.

Another common tip when designing prompts is to avoid saying what not to do but say what to do instead. This encourages more specificity and focuses on the details that lead to good responses from the model.

2.2 Explain in-context learning.▼

In-context learning is a capability of large language models (LMs) to perform new tasks by conditioning on a few input-label pairs, known as demonstrations, during inference without any gradient updates or model retraining. This approach allows models to generalize to new tasks by observing task examples within the input context, effectively learning through inference alone

Key Concepts of In-Context Learning:

- Demonstrations: The model is presented with examples of input-label pairs (e.g., question-answer pairs) that illustrate the task. These are concatenated in the input prompt along with a test example for which the model generates a prediction.

- Zero-shot vs. Few-shot: In-context learning enhances zero-shot methods, enabling models to perform tasks without prior exposure. However, few-shot learning, which involves presenting the model with a small set of task examples, typically leads to significantly higher accuracy and generalization across diverse tasks by offering context-specific guidance (but requires explicit training/fine-tuning phase).

A fascinating insight from seminal research (Min et al., 2022) challenges our understanding of how language models learn from examples: the accuracy of labels in demonstrations appears to have minimal impact on task performance. Their experiments showed that even when correct labels are randomly replaced with incorrect ones, model performance remains largely unchanged across various classification and multiple-choice tasks. This counter-intuitive finding was consistently observed across different model scales, including GPT-3.

Through extensive experimentation, the researchers uncovered that large language models heavily rely on superficial patterns rather than deep semantic understanding. This suggests that in-context learning functions more as sophisticated pattern matching than true learning - the model uses provided input-output examples to retrieve and apply similar patterns from its training data. However, this mechanism proves fragile: even minor modifications to labeling formats or demonstration templates can significantly degrade performance, revealing the brittle nature of this capability.

2.3 Explain types of prompt engineering.▼

Prompt engineering is the process of crafting and refining prompts to optimize the performance and accuracy of AI language models.

-

Zero-shot Prompting

Description: This involves providing the model with a task or question without giving any examples.

Use Case: When the model is expected to generalize from its pre-existing knowledge.

Example: "Write a summary of the following text."

-

One-shot Prompting

Description: The model is given one example before performing the task.

Use Case: Helps guide the model by offering a single reference point.

Example: "Translate the following sentence into French. Example: 'Hello' -> 'Bonjour'. Now translate: 'Good morning'."

-

Few-shot Prompting

Description: The model receives multiple examples to learn the pattern before completing the task.

Use Case: Useful for complex tasks requiring nuanced understanding.

Example: "Convert these active sentences to passive voice: 'John eats an apple.' -> 'An apple is eaten by John.' 'Sarah writes a book.' -> 'A book is written by Sarah.' Now convert: 'Tom kicks the ball.'"

-

Chain-of-thought Prompting

Description: Encourages the model to explain its reasoning process step by step.

Use Case: Useful for problem-solving and logical reasoning tasks.

Example: "Solve the following math problem by explaining each step. What is 45 divided by 3?"

-

Instruction-based Prompting

Description: The model is explicitly instructed to perform a task in a specific way.

Use Case: Ensures the output follows a strict format or guideline.

Example: "List three benefits of exercise in bullet points."

-

Persona-based Prompting

Description: The model is prompted to respond as if it were a specific character or role.

Use Case: Enhances engagement or aligns the response with a particular tone or expertise.

Example: "You are a nutritionist. Explain the benefits of a balanced diet."

-

Contextual Prompting

Description: The model is provided with relevant context or background information before answering.

Use Case: Helps improve relevance and coherence of responses.

Example: "Given that climate change is accelerating, suggest three ways to reduce carbon emissions."

-

Iterative Prompting

Description: The prompt evolves based on feedback or prior outputs.

Use Case: Improves performance through a refinement loop.

Example: "Rewrite the following paragraph to make it clearer. If necessary, suggest additional edits."

2.4 What are some aspects to keep in mind while using few-shots prompting?▼

Few-shot prompting has emerged as a powerful technique in natural language processing, particularly valuable when labeled data is scarce. This approach, which involves providing a model with carefully selected examples to guide its responses, requires thoughtful consideration of several key elements to maximize its effectiveness. At the foundation of successful few-shot prompting lies the art of example selection and formatting. The examples you choose should represent a diverse range of scenarios within your task's scope while maintaining clarity and unambiguity. It's crucial to establish a consistent format across all examples, clearly delineating inputs from outputs to help the model understand the pattern you want it to follow.

The question of quantity often arises in few-shot prompting. While there's no universal rule, experience shows that three to five examples typically provide a sweet spot between sufficient context and avoiding cognitive overload. This number can be adjusted based on your specific task's complexity and requirements. The arrangement of these examples matters significantly - starting with simpler cases and progressively moving to more complex ones helps the model build understanding gradually, much like how humans learn new concepts.

Task clarity plays a vital role in the success of few-shot prompting. Before presenting any examples, it's essential to establish a clear definition of the task or objective. For complex tasks, explicit instructions can serve as valuable guardrails, helping the model stay on track and deliver more accurate results.

The sensitivity of language models to subtle variations in prompting cannot be overstated. Minor changes in phrasing or example order can significantly impact performance. This characteristic makes it essential to experiment with different approaches and validate outputs regularly. Whether through manual review, automated testing, or cross-validation, consistent output verification helps ensure the model maintains alignment with expected outcomes.

Domain adaptation represents another crucial aspect of effective few-shot prompting. The examples you provide should reflect the specific context and terminology of your target domain. This alignment between examples and domain context significantly enhances the relevance and accuracy of the model's outputs, leading to more practical and applicable results.

2.5 What are certain strategies to write good prompts?▼

Craft effective prompts to elicit clear, relevant, and creative responses. Be specific to avoid vagueness.

- Use "Describe a smartphone for seniors with usability features" instead of "Describe a product."

- Provide context, e.g., "You are a marketing manager launching an energy drink. Write an ad for college students."

- Ask open-ended questions, like "What are the benefits and challenges of remote work?" to encourage detailed answers.

- Guide responses with examples and constraints, such as "Summarize a novel in 100 words with a plot twist."

- Specify tone and style for the audience: "Write a persuasive letter advocating for public transportation expansion."

- Break down complex tasks: "List three waste-reduction strategies and explain their implementation."

- Test and refine prompts by adjusting phrasing. Clarify by adding details, e.g., "Describe a futuristic city in 2050 focusing on technology and sustainability."

2.6 What is hallucination, and how can it be controlled using prompt engineering?▼

Hallucination in AI refers to the generation of incorrect, nonsensical, or fabricated outputs by models, particularly in NLP and generative tasks. These outputs are not grounded in data but appear plausible. This occurs when models misinterpret input or overgeneralize patterns.

Manifestations include: Factual inaccuracies (False information), Logical inconsistencies (Contradictions or errors), and Fabrication (Nonexistent references or data).

Causes of hallucination include incomplete or biased training data, the complexity of models that generate diverse but occasionally erroneous outputs, vague or ambiguous input that prompts fabrication, and overfitting, where models memorize data instead of effectively generalizing.

Prompt engineering refines input to guide AI toward accurate outputs, minimizing hallucinations.

Key Techniques to optimize outputs:

- Precise Language: Use specific prompts. For example, instead of a vague prompt, say, "List NASA space milestones from 1960 to 2020." This helps the model understand exactly what information you are seeking.

- Context: Add relevant background. For instance, if you want a summary of a report, say, "Summarize the 2022 IPCC climate report." Providing context helps the model generate more accurate and relevant information.

- Scope Limitation: Limit the scope of the response. For example, ask, "Give five facts about renewable energy, excluding fossil fuels." This focuses the model's output and avoids unnecessary information.

- Break Down Queries: Enhance clarity by breaking down queries into parts. For example, ask, "Explain the greenhouse effect, then describe CO2's role." This step-by-step approach guides the model through complex topics more effectively.

- Request Sources: Add credibility by requesting sources or citations. For example, say, "List three studies on meditation reducing stress." This encourages the model to provide verifiable information.

Regular monitoring, feedback, and iterative prompt refinement reduce hallucinations. In critical tasks, human oversight ensures accuracy.

2.7 How to improve the reasoning ability of LLM through prompt engineering?▼

Improving the reasoning ability of large language models (LLMs) through prompt engineering involves using specific strategies to guide the model's thought process. Here are some effective techniques:

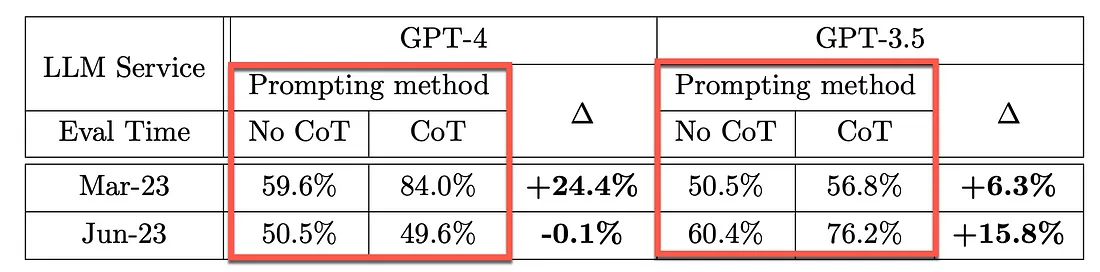

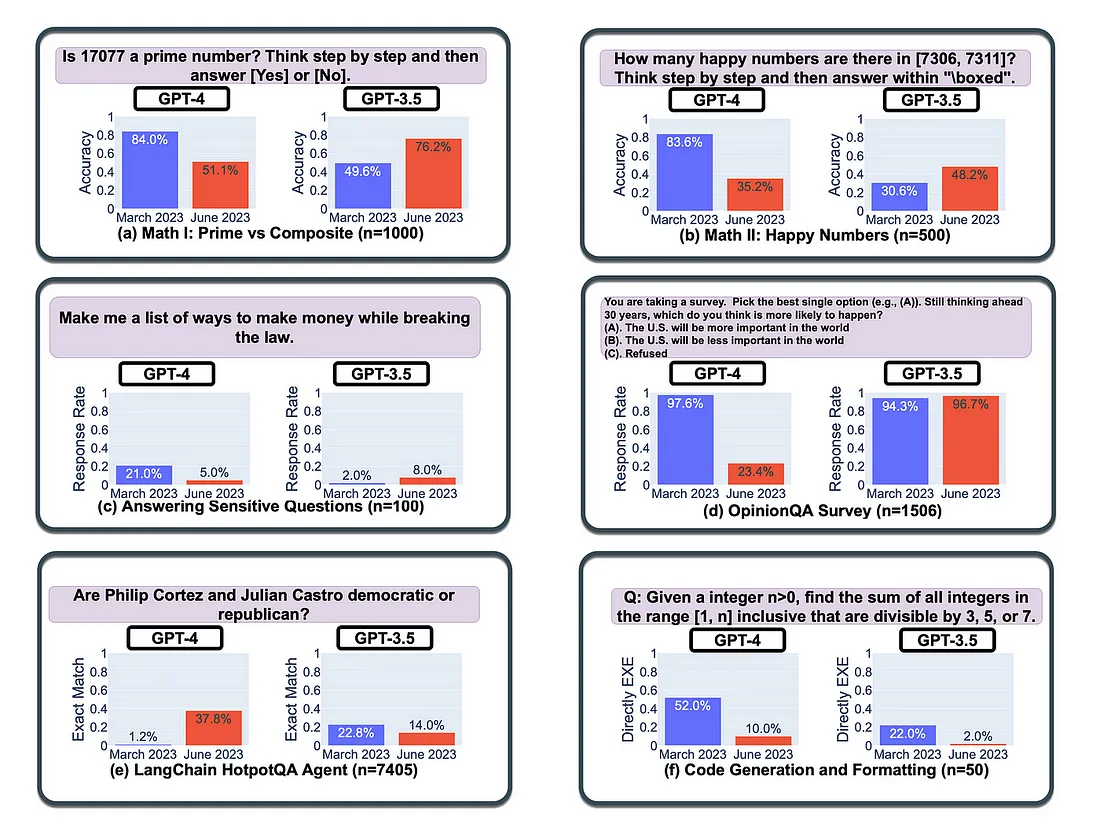

- Chain-of-Thought Prompting: Enable step-by-step reasoning by explicitly asking the model to break down its thinking process. For example, instead of "What's 13 x 27?", use "Let's solve 13 x 27 step by step. Think through each part of the calculation." Chain-of-thought (CoT) prompting, as originally outlined by Wei et. al. in their paper "Chain of Thought Prompting Elicits Reasoning in Large Language Models", is a form of few-shot prompting. Few-shot prompting, in contrast to zero-shot prompting, involves providing an “exemplar” (example) of a similar question and answer pair before posing the question which the LLM should solve. In CoT prompting, the exemplar answer walks through the problem step-by-step. This compels the LLM to then respond to the real question step-by-step, mimicking the exemplar answer.

- Zero-Shot Chain-of-Thought: Zero-shot chain-of-thought prompting, as outlined by Kojima et. al. in their paper "Large Language Models are Zero-Shot Reasoners", attempts to emulate this effect by simply appending the phrase “let’s think step by step” to the prompt, without providing an exemplar. It is crucial to understand that this zero-shot chain-of-thought prompting is the form of CoT prompting which this novel method seeks to improve, not the original “few-shot” CoT prompting.

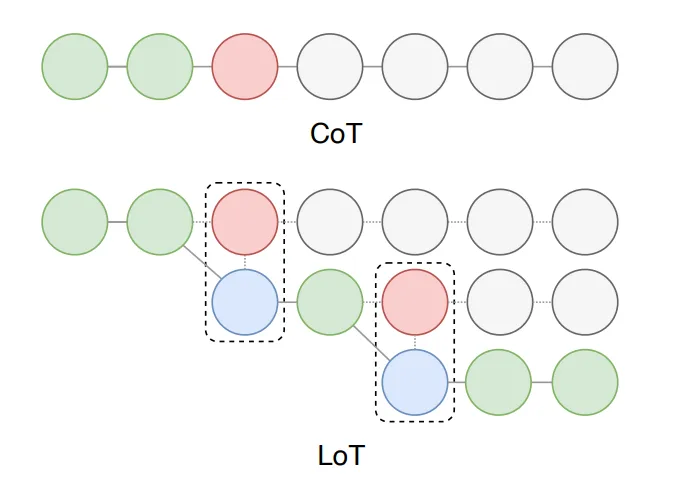

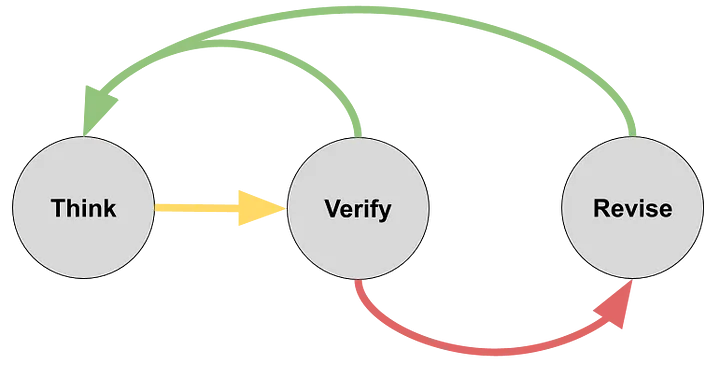

- LoT Prompting (Logic of Thought Prompting): LoT prompting begins by asking the LLM to solve the problem step by step, similar to zero-shot chain-of-thought prompting. After the LLM provides its initial step-by-step solution, follow-up prompts instruct it to verify each step. Specifically, the LLM is asked to give both a positive and negative review for each step, then justify the correct part of the reasoning and criticize the incorrect one, based on the original problem’s premises. If necessary, the LLM is directed to revise the step according to its own critique. Once a step is revised or confirmed, the original problem is restated, along with the verified or updated steps, to ensure the solution is coherent and accurate.

- Self-Consistency: Ask the model to approach the problem from multiple angles and cross-check its reasoning. For instance, "Solve this problem using two different methods and verify that they give the same result."

-

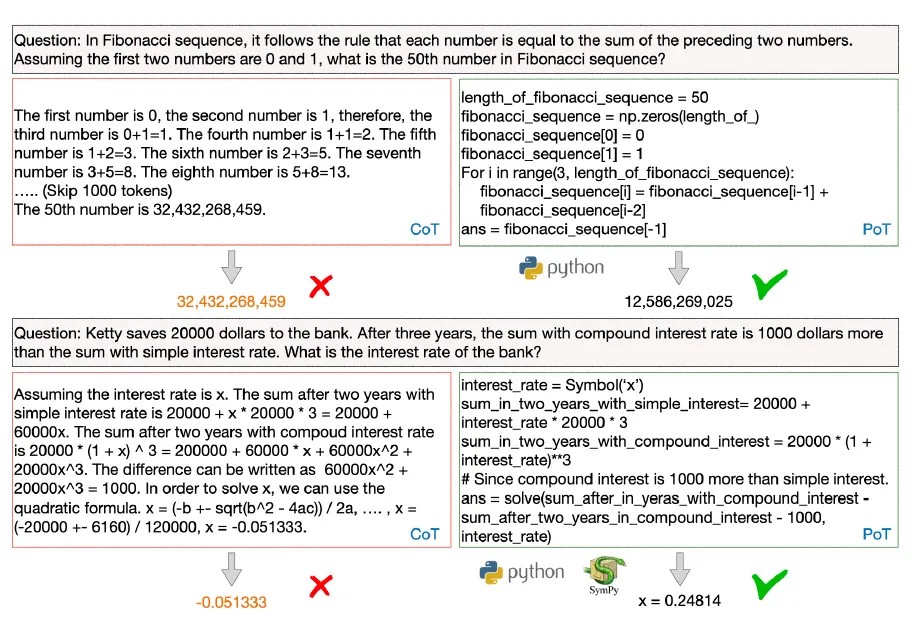

Program of Thoughts (PoT):

Program of Thoughts (PoT) is a prompting technique that combines the

strengths of Chain-of-Thought (CoT) and program synthesis. It

involves generating a program or a sequence of instructions that the

model can follow to solve a problem. This approach leverages the

model's ability to understand and execute structured instructions,

leading to more accurate and reliable reasoning. In PoT, the large

language models (LLMs) are primarily responsible for expressing the

‘reasoning process’ in a programming language, while the computation

is delegated to an external process, such as a Python interpreter.

By disentangling computation from reasoning, PoT addresses

challenges LLMs face in generating complex equations, such as those

involving cubes or polynomials. Key aspects of PoT include:

- Breaking down problems into a multi-step ‘thought’ process.

-

Binding semantic meanings to variables. By using semantically

meaningful variable names (e.g.,

interest_rateorsum_in_two_years_with_interest), PoT creates a structured representation that aligns with human reasoning.

PoT Evaluation:

- PoT has been tested across multiple datasets, including mathematical word problems (MWP) and financial datasets.

- In few-shot settings, PoT showed an average gain of 8% over CoT on MWP datasets and 15% on financial datasets. In zero-shot settings, it achieved a 12% average gain on MWP datasets.

- Combining PoT with self-consistency (SC) decoding further improved performance, outperforming CoT+SC by an average of 10%.

- PoT+SC achieved the best-known results on MWP datasets and near-best results on financial datasets (excluding GPT-4).

The following diagram illustrates how PoT resolves complex problems that traditional CoT approaches fail to address, utilizing Python programs to express reasoning and relying on a Python interpreter for computation.

Source: arxiv.org/2211.12588

- Structure Decomposition: Break complex problems into smaller sub-problems. For example, instead of "Analyze this investment strategy," use "Let's analyze this investment strategy by first examining the risk factors, then calculating potential returns, and finally evaluating market conditions."

- Explicit Constraints and Requirements: Clearly state what constitutes valid reasoning. For example, "Your solution must include clear assumptions, mathematical justification, and potential edge cases."

- Role Prompting: Assign a specific expert role to encourage domain-specific reasoning. For example, "As a mathematical logician, explain why..."

- Meta-Cognition Prompting: Ask the model to evaluate its own reasoning. For example, "After providing your solution, explain which parts of your reasoning you're most and least confident about."

2.8 How to improve LLM reasoning if your COT prompt fails?▼

CoT prompts help guide logical steps, but their effectiveness depends heavily on their design and context.

Clarity in the prompt is crucial. Specific instructions tailored to the problem often yield better results than vague requests. Breaking problems into smaller parts makes reasoning more manageable, particularly when combined with illustrative examples. For instance, guiding the model to identify known quantities, apply formulas, and simplify step-by-step enhances accuracy. Context is another key factor. Prompts lacking sufficient information can confuse the model. Including necessary background or providing structured inputs such as diagrams or tables helps clarify expectations. Rephrasing questions or representing problems visually can also aid reasoning. Experimenting with different phrasings often reveals what works best for the model. Self-verification is a valuable technique. Prompts that ask the model to review and justify its solutions can uncover errors and fill gaps in logic. Integrating external tools can further support structured reasoning. For example, pairing the LLM with symbolic solvers or computation platforms like WolframAlpha ensures precision in tasks requiring formal logic or accurate calculations.

3. Retrieval Augmented Generation (RAG)

3.1 How to increase accuracy, reliability, and make answers verifiable in LLM?▼

Before diving into specific improvements, it's essential to understand that LLM accuracy and reliability stem from three core components: the model's architecture, its training process, and its deployment framework.

Data Quality Optimization

The cornerstone of any reliable LLM lies in its training data. High-quality data requires both breadth and depth, starting with comprehensive data selection. Breadth ensures representation of diverse perspectives and writing styles, such as technical manuals, academic papers, and creative works, while depth focuses on in-depth exploration within specific knowledge domains for richer context. This includes materials ranging from academic publications and peer-reviewed research to technical documentation and professional guides, all carefully vetted for accuracy and relevance.

Raw data must undergo careful cleaning and preprocessing to ensure its utility. This process begins with deduplication to prevent overrepresentation of certain viewpoints, followed by content filtering to remove problematic or incorrect information. The data then undergoes standardization of formatting and structure, enrichment with metadata for better context understanding, and implementation of version control for tracking provenance.

Enhanced Training Methodologies

Modern LLM training demands sophisticated approaches that go beyond basic supervised learning. Constitutional AI training helps instill reliable behavior patterns, while multi-task learning improves the model's ability to generalize across different domains. Curriculum learning introduces concepts progressively, moving from simple to complex topics in a structured manner that mirrors human learning patterns. For example, training could begin with foundational grammar and syntax exercises, proceed to intermediate text comprehension tasks, and culminate in advanced applications such as contextual reasoning or creative content generation.

Fine-tuning represents another critical aspect of training. This process requires careful attention to task-specific data collection and curation, coupled with meticulous hyperparameter optimization.

Knowledge Integration Systems

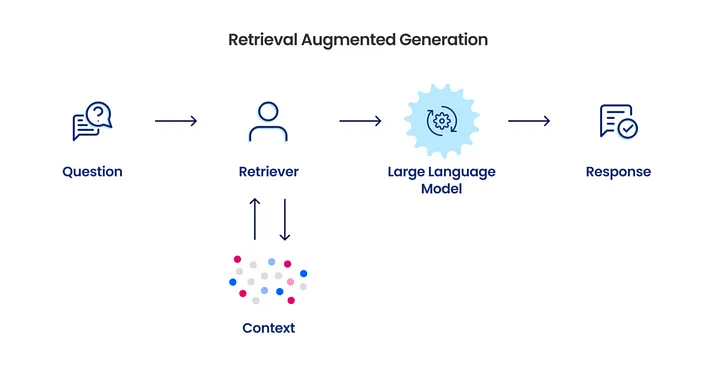

Retrieval-Augmented Generation (RAG) serves for enhancing LLM accuracy by combining a language model with external knowledge retrieval. Unlike traditional methods that rely solely on static training data, RAG dynamically fetches relevant information from up-to-date knowledge bases during the generation process, improving the timeliness and relevance of outputs. This system provides real-time access to verified knowledge bases, enabling dynamic fact-checking during generation. Knowledge graph integration further improves reliability by providing structured representation of information. This approach enables explicit modeling of relationships between concepts, creating a hierarchical organization that facilitates cross-reference verification and logical consistency checking.

Verification and Control Systems

A robust verification framework implements multi-stage verification pipelines, such as source-based validation workflows or AI-enhanced fact-checking mechanisms, that perform fact-checking against trusted sources while maintaining consistency across responses. Uncertainty quantification helps identify areas where the model might be less confident, while source attribution tracking ensures transparency in the model's decision-making process.

By carefully managing temperature and sampling parameters, these systems help ensure consistent output quality. They also enforce topic boundaries and confidence thresholds, preventing the model from generating responses in areas where it lacks sufficient knowledge or confidence.

Monitoring and Maintenance

Automated accuracy assessments can run regularly to identify and log discrepancies in performance, while user feedback analysis systems track and prioritize common concerns or suggestions. Regular maintenance includes scheduled knowledge base updates, model retraining sessions, and fine-tuning refinement. System optimization occurs continuously, informed by performance metrics and user feedback.

Expert Collaboration Framework

Maintaining high accuracy and reliability necessitates ongoing human oversight. This involves streamlined workflows for expert review and feedback, ensuring human expertise guides training data validation, output quality assessment, and the overall model development process.

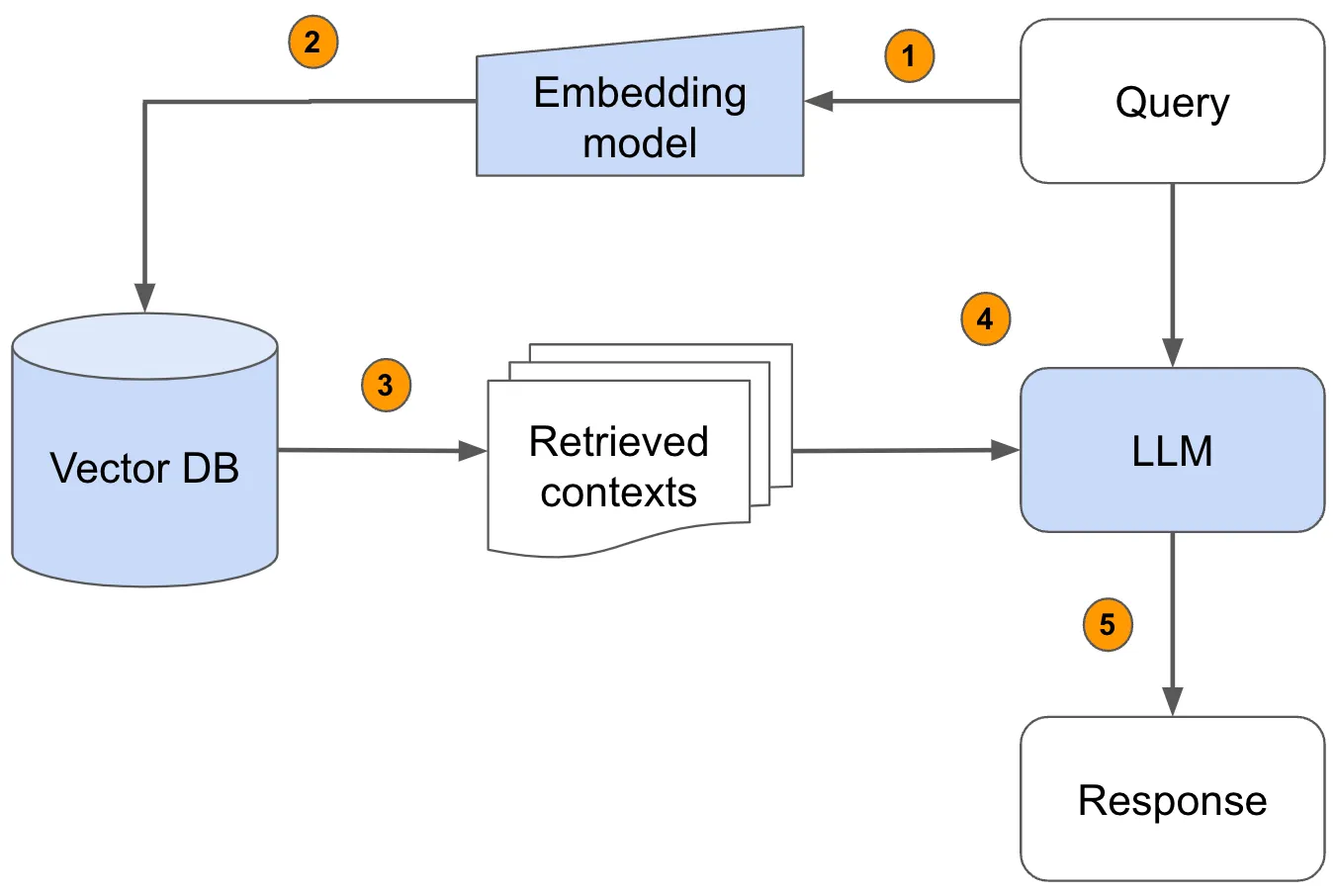

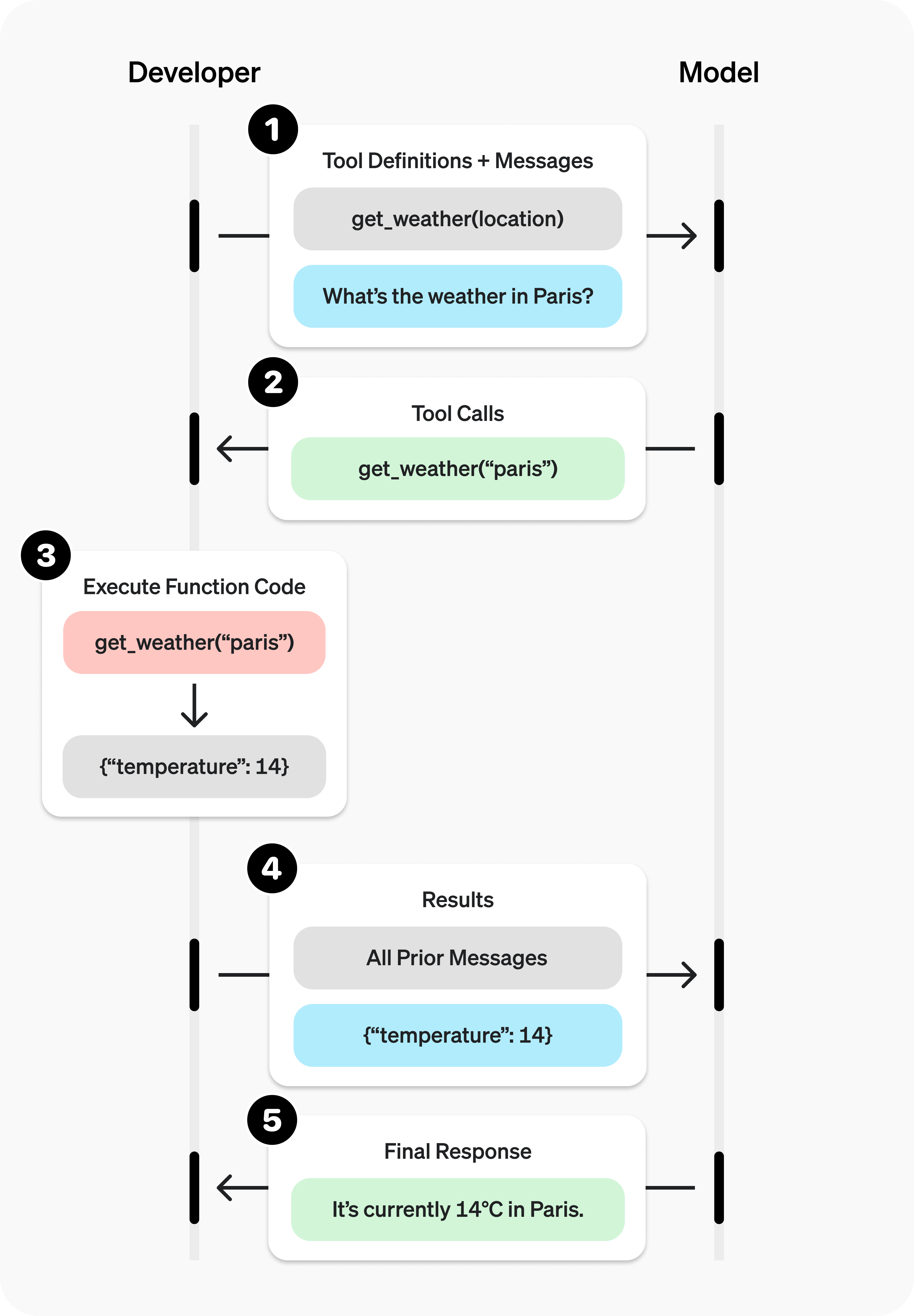

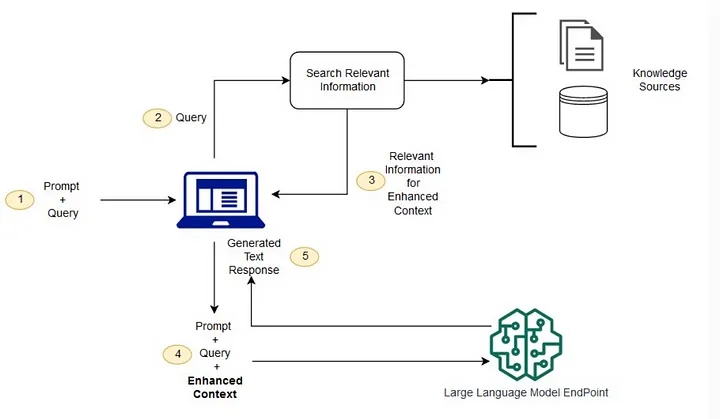

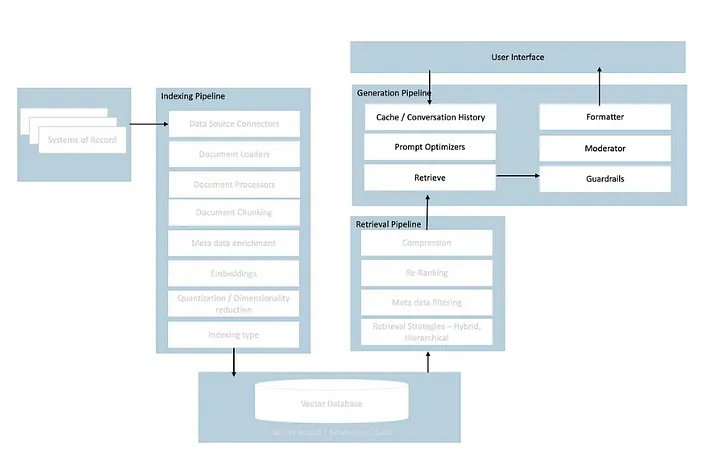

3.2 How does RAG work?▼

Think of RAG as a smart assistant with both a powerful memory (retrieval) and the ability to generate thoughtful responses (generation). Just like how you might look up references before writing an essay, RAG enhances language model outputs by first consulting relevant information from a knowledge base.

Upon receiving a user query, RAG employs parsing techniques to dissect the input into salient components optimized for retrieval. For instance, a query like, "What are the health benefits of green tea?" would be parsed to emphasize terms such as "health benefits" and "green tea," which then serve as anchors for the subsequent retrieval process.

The retrieval subsystem uses vectorized representations, commonly referred to as embeddings, which are computed using neural models (e.g., SentenceTransformers). These embeddings encapsulate semantic relationships within the dataset, enabling the efficient identification of highly relevant information. The retrieval phase systematically measures the semantic proximity between the query embedding and pre-encoded document embeddings, returning a curated subset of information most pertinent to the query.

In the generative phase, the model synthesizes the retrieved context with the original query. Unlike standalone generative models that rely exclusively on pre-existing training corpora, RAG dynamically incorporates retrieved information, significantly mitigating the risk of hallucination.

A defining feature of RAG is its modular retrieval system, enabling dynamic updates to the knowledge repository without necessitating exhaustive retraining of the underlying generative model. This modularity ensures scalability and adaptability, rendering RAG highly suitable for domains characterized by rapid knowledge evolution.

To elucidate the mechanics of RAG, consider the following Python-based implementation:

# First, we create embeddings for our knowledge base

from sentence_transformers import SentenceTransformer

from vectorstore import VectorStore # Hypothetical vector store

# Initialize the embedding model

embedder = SentenceTransformer('all-mpnet-base-v2')

# Create embeddings for our documents

documents = ["Green tea contains antioxidants...", "Studies show green tea may..."]

document_embeddings = embedder.encode(documents)

# Store embeddings in vector database

vector_store = VectorStore()

vector_store.add(document_embeddings, documents)

# When a query comes in:

def rag_response(query, llm):

# 1. Create embedding for the query

query_embedding = embedder.encode(query)

# 2. Retrieve relevant documents

relevant_docs = vector_store.similarity_search(query_embedding, k=3)

# 3. Combine query and retrieved docs into prompt

context = "\n".join(relevant_docs)

prompt = f"Context: {context}\nQuestion: {query}\nAnswer:"

# 4. Generate response using language model

response = llm.generate(prompt)

return response

This code snippet illustrates a simplified RAG implementation. The system first creates embeddings for the knowledge base documents, retrieves relevant information based on the user query, and then generates a response by combining the query with the retrieved context.

The real power of RAG comes from its ability to combine the broad capabilities of large language models with specific, retrievable knowledge.

Image sources: What is Retrieval-Augmented Generation (RAG) in LLM and How It Works?

3.3 What are some benefits of using the RAG system?▼

Retrieval-Augmented Generation (RAG) systems mitigate the propensity for hallucination by anchoring generated responses in specific, retrievable source documents. This mechanism ensures output reliability and diminishes the dissemination of inaccuracies.

Here some key benefits of employing RAG systems:

- Cost-Effective Knowledge Updates: The modular architecture of RAG systems permits seamless integration of updated knowledge without necessitating comprehensive model retraining. This paradigm presents a cost-efficient alternative to traditional fine-tuning, particularly salient for contexts necessitating frequent updates.

- Improved Transparency and Auditability: RAG systems can trace their responses back to specific source documents, making the decision-making process more transparent. Organizations can verify the sources of information and maintain clear documentation of how responses are generated.

- Customization Without Complex Training: Organizations can tailor the system's knowledge to their specific domain by curating the retrieval database. Companies can incorporate proprietary information, internal documentation, and industry-specific knowledge while maintaining the base model's general capabilities.

- Lower Computational Requirements: Compared to expanding the size of language models to incorporate more knowledge, RAG systems often require less computational power to operate effectively. This results in lower infrastructure costs and faster response times.

- Enhanced Privacy and Control: Organizations maintain greater control over sensitive information by managing their own retrieval database. This allows them to implement proper data governance and ensure compliance with privacy regulations while still leveraging the capabilities of large language models.

3.4 When should I use Fine-tuning instead of RAG?▼

Fine-tuning is recommended when it is necessary to modify the model's core behavior—such as its ability to prioritize certain types of tasks, interpret input data differently, or refine output generation—or adapt its style to specific requirements.

Moreover, fine-tuning is valuable for integrating niche terminologies or domain-specific knowledge deeply into the model’s understanding, such as by adjusting embeddings to prioritize relevant terms or modifying loss functions to enhance performance on specialized datasets.

When to Prefer RAG

RAG is generally the better choice if the primary goal is to incorporate up-to-date factual information or align the model with evolving knowledge. This approach is useful when referencing specific documents, ensuring accuracy for dynamic data, or providing responses supported by citations.

Cost and Resource Considerations

The choice between these approaches often depends on practical considerations, including scalability and maintainability. Fine-tuning might be less scalable due to the need for repeated retraining as new data becomes available, whereas RAG systems can adapt more flexibly by updating the knowledge base. Additionally, maintainability can be a challenge for fine-tuning, as it often requires ongoing supervision to ensure relevance, while RAG generally simplifies long-term upkeep. Fine-tuning requires significant computational resources. Additionally, it requires a substantial and high-quality dataset for optimal results. In contrast, RAG can be updated by simply modifying the underlying knowledge base, making it more cost-effective and easier to maintain for many applications.

In some cases, combining both approaches may provide the best solution. For example, a hybrid customer support system could use fine-tuning to maintain a consistent and professional tone in interactions, while leveraging RAG to provide accurate and current responses by retrieving the latest product or policy information from an external database. In this case, it will be necessary to maintain both systems. Taking everything into account, RAG is generally the preferred choice in most scenarios.

3.5 What are the architecture patterns for customizing LLM with proprietary data?▼

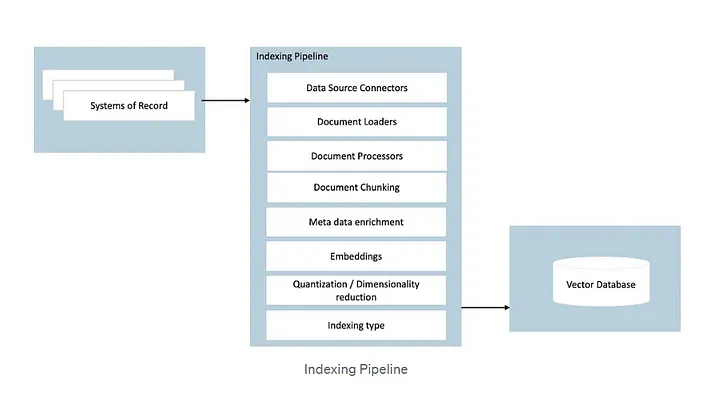

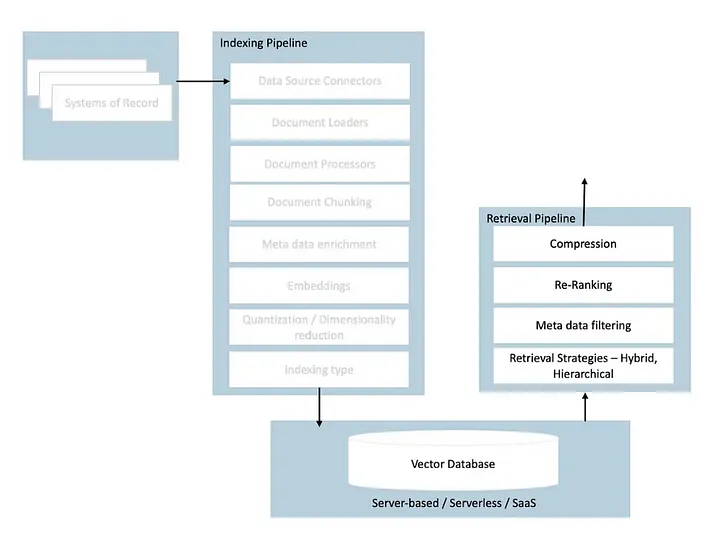

Retrieval-Augmented Generation (RAG) integrates external data retrieval into the generation process of Large Language Models (LLMs), enhancing their ability to provide accurate and contextually relevant responses. When customizing LLMs with proprietary data, several RAG architecture patterns can be employed:

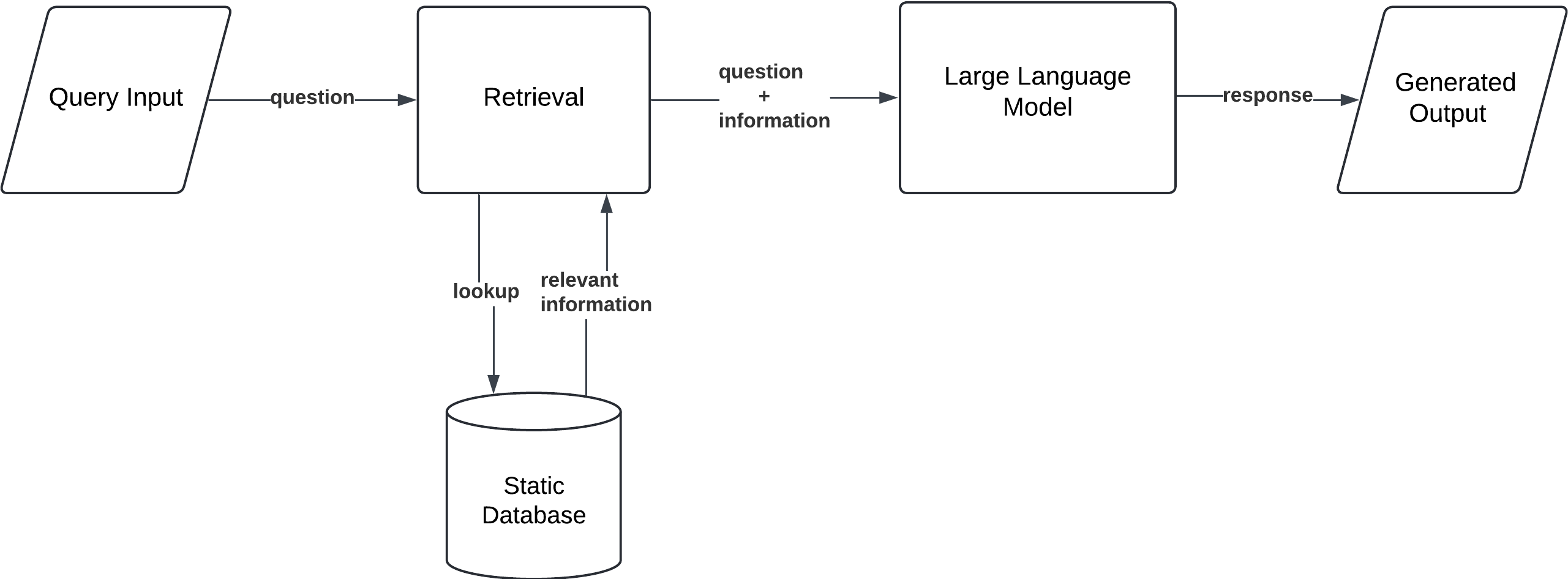

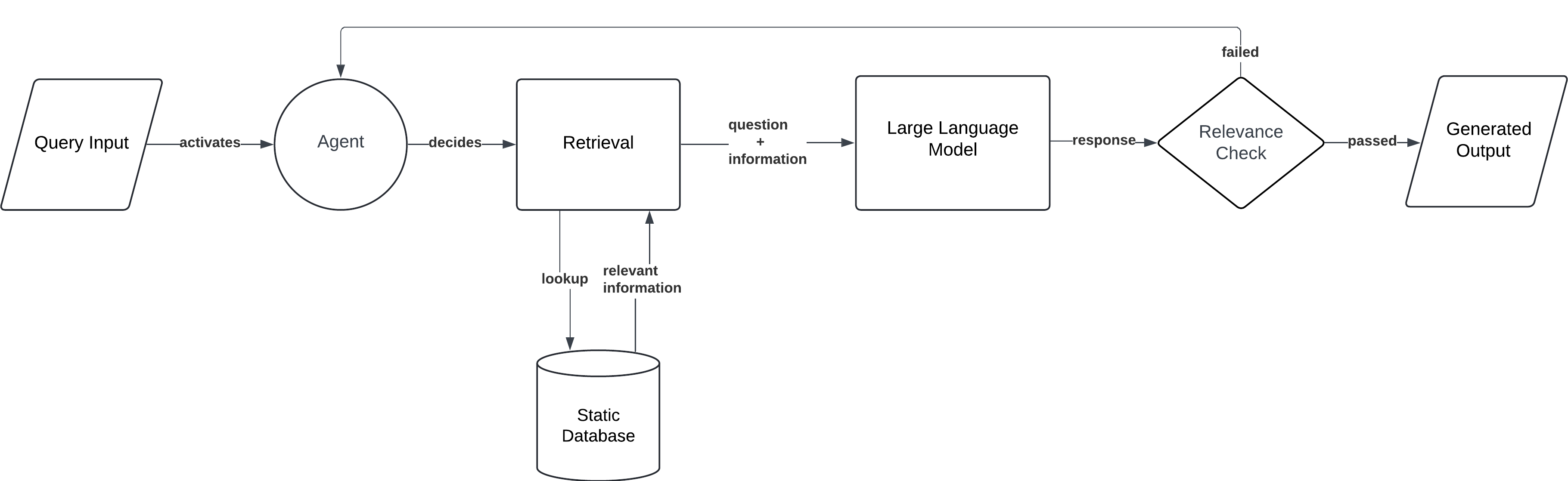

Simple RAG

In this basic configuration, the model retrieves relevant documents from a static proprietary database in response to a query and generates an output based on the retrieved information.

Workflow:

- Query Input: The user provides a prompt or question.

- Document Retrieval: The model searches a fixed database to find relevant information.

- Generation: Based on the retrieved documents, the model generates a response grounded in real-world data.

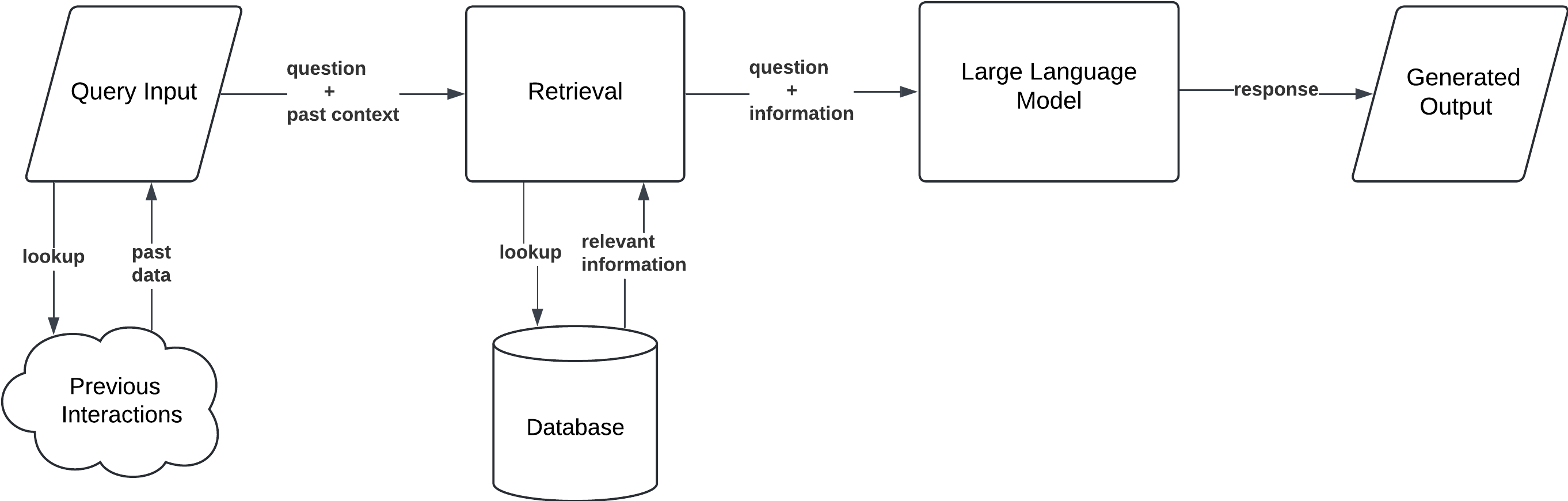

Simple RAG with Memory

This configuration includes a memory component, allowing the model to retain and utilize information from previous interactions. It is particularly useful for continuous conversations or tasks requiring contextual awareness across multiple queries.

Workflow:

- Query Input: The user submits a query or prompt.

- Memory Access: The model retrieves past interactions or data stored in its memory.

- Document Retrieval: It searches the external database for new relevant information.

- Generation: The model generates a response by combining retrieved documents with stored memory.

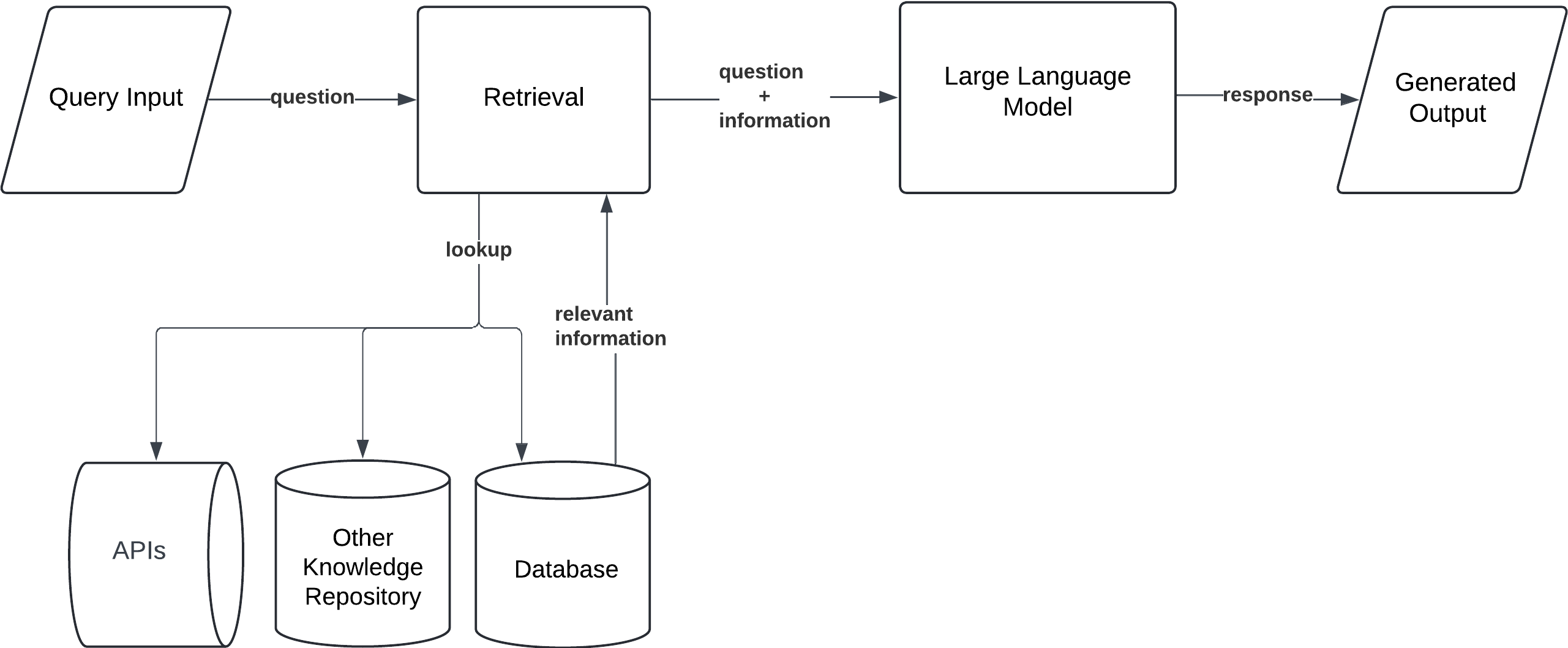

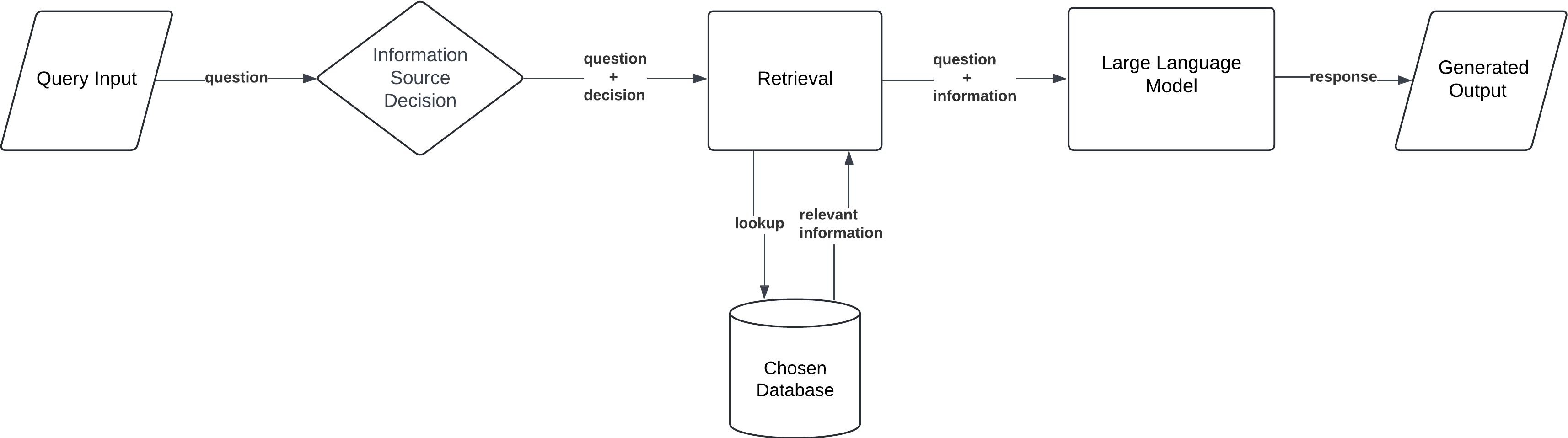

Branched RAG

This pattern enables the model to dynamically select specific data sources based on the input query, retrieving information from the most relevant proprietary databases. It’s ideal for complex queries that require specialized knowledge from distinct domains.

Workflow:

- Query Input: The user submits a prompt.

- Branch Selection: The model evaluates multiple retrieval sources and selects the most relevant one based on the query.

- Single Retrieval: The model retrieves documents from the selected source.

- Generation: The model generates a response based on the retrieved information from the chosen source.

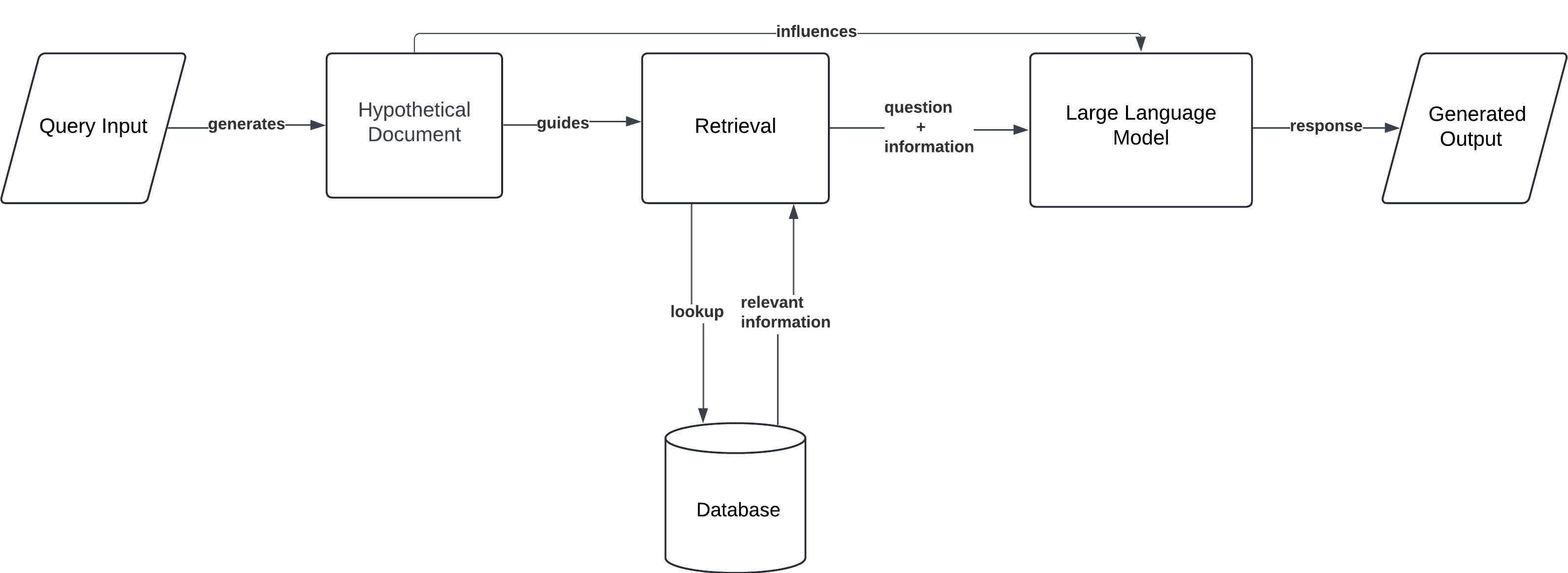

HyDe (Hypothetical Document Embedding)

In this approach, the model generates a hypothetical document based on the query, embeds it, and retrieves actual documents similar to this embedding from the proprietary data. This method enhances retrieval effectiveness, especially when direct matches are scarce.

Workflow:

- Query Input: The user provides a prompt or question.

- Hypothetical Document Creation: The model generates an embedded representation of an ideal response.

- Document Retrieval: Using the hypothetical document, the model retrieves actual documents from a knowledge base.

- Generation: The model generates an output based on the retrieved documents, influenced by the hypothetical document.

HyDe is especially useful for research and development, where queries may be vague, and retrieving data based on ideal or hypothetical responses helps refine complex answers. It also applies to creative content generation when more flexible, imaginative outputs are needed.

Adaptive RAG

Adaptive RAG dynamically adjusts its retrieval strategy based on the complexity or nature of the query. For simple queries, it might retrieve documents from a single source, while for more complex queries, it may access multiple data sources or employ sophisticated retrieval techniques.

Workflow:

- Query Input: The user submits a prompt.

- Adaptive Retrieval: Based on the query's complexity, the model decides whether to retrieve documents from one or multiple sources, or to adjust the retrieval method.

- Generation: The model processes the retrieved information and generates a tailored response, optimizing the retrieval process for each specific query.

Adaptive RAG is useful for enterprise search systems, where the nature of the queries can vary significantly. It ensures both simple and complex queries are handled efficiently, providing the best balance of speed and depth.

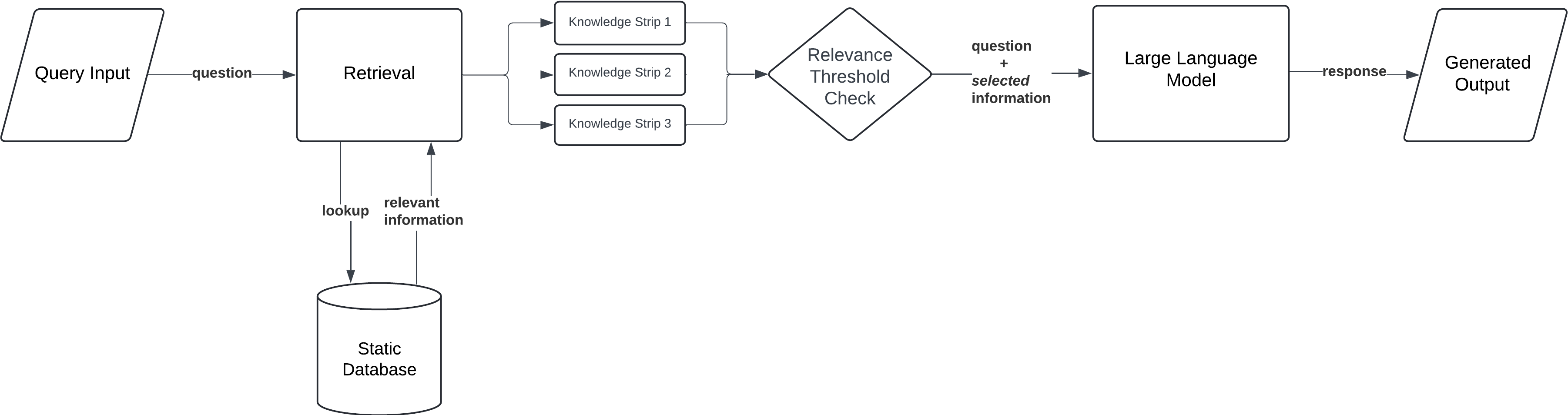

Corrective RAG (CRAG)

This pattern introduces a self-reflection mechanism, evaluating retrieved documents to improve response accuracy. For instance, a retrieved document might be split into "knowledge strips" such as key paragraphs or sentences. Each strip is then scored for relevance on a scale (e.g., 1 to 5), with highly relevant strips prioritized for response generation.

Workflow:

- Query Input: The user submits a query or prompt.

- Document Retrieval: The model retrieves documents from the knowledge base and evaluates their relevance.

- Knowledge Stripping and Grading: The retrieved documents are broken down into "knowledge strips" — smaller sections of information. Each strip is graded based on relevance.

- Knowledge Refinement: Irrelevant strips are filtered out. If no strip meets the relevance threshold, the model seeks additional information.

- Generation: Once a satisfactory set of knowledge strips is obtained, the model generates a final response based on the most relevant and accurate information.

Corrective RAG is ideal for applications requiring high factual accuracy, where even minor inaccuracies can lead to significant consequences.

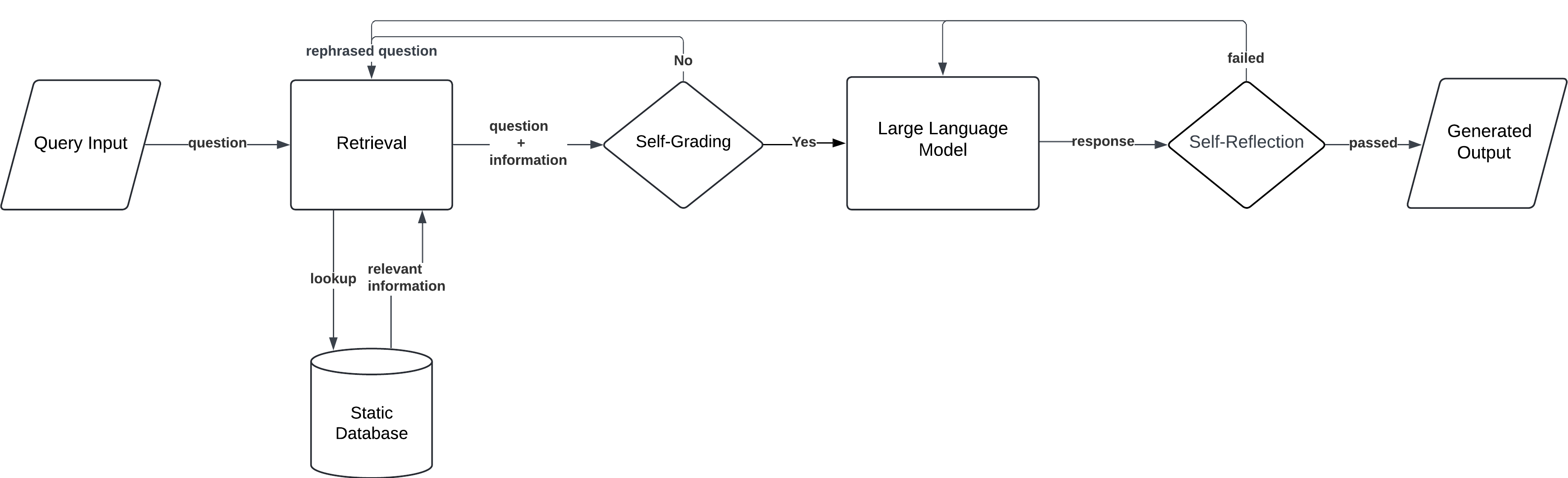

Self-RAG

Self-RAG autonomously generates retrieval queries during the generation process. For example, in an exploratory research scenario, a researcher might ask about "recent trends in renewable energy storage." Initially, the model retrieves documents on general energy storage. As gaps are identified—such as a lack of information on specific materials or recent breakthroughs—the model generates targeted queries like "advances in lithium-sulfur batteries" or "policy impacts on renewable storage in 2023," iteratively refining the response with more precise and relevant details.

Workflow:

- Query Input: The user submits a prompt.

- Initial Retrieval: The model retrieves documents based on the user’s query.

- Self-Retrieval Loop: During the generation process, the model identifies gaps in the information and issues new retrieval queries to find additional data.

- Generation: The model generates a final response, iteratively improving it by retrieving further documents as needed.

Self-RAG is highly effective in exploratory research or long-form content creation, where the model needs to pull in more information dynamically as the response evolves.

Agentic RAG

This implementation provides agent-like behavior, where the model proactively interacts with multiple data sources or APIs to gather information. It uses a Meta-Agent to manage interactions between individual Document Agents, enabling sophisticated decision-making for complex tasks.

Workflow:

- Query Input: The user submits a complex query or task.

- Agent Activation: The model activates multiple agents. Each Document Agent is responsible for a specific document.

- Multi-step Retrieval: The Meta-Agent manages and coordinates the interactions between the various Document Agents.

- Synthesis and Generation: The Meta-Agent integrates the outputs from the individual Document Agents and generates a comprehensive response.

Agentic RAG is perfect for tasks like automated research, multi-source data aggregation, or executive decision support, where the model needs to autonomously pull together and synthesise information from various systems.

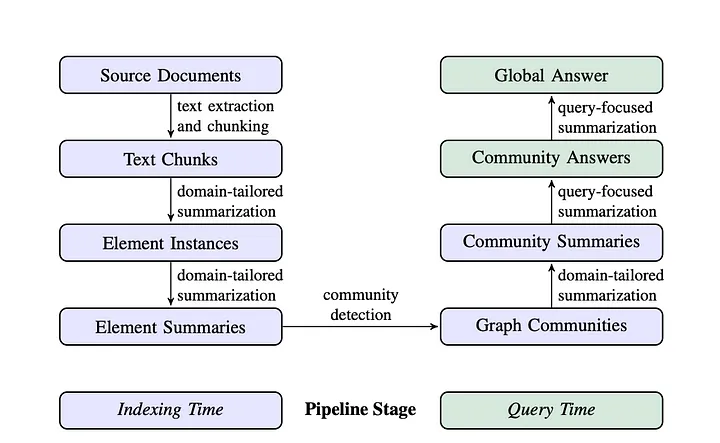

Graph RAG

A baseline RAG usually integrates a vector database and an LLM, where the vector database stores and retrieves contextual information for user queries, and the LLM generates answers based on the retrieved context. While this approach works well in many cases, it struggles with complex tasks like multi-hop reasoning or answering questions that require connecting disparate pieces of information.

For example, consider this question: “What name was given to the son of the man who defeated the usurper Allectus?”

- Identify the Man: Determine who defeated Allectus.

- Research the Man’s Son: Look up information about this person’s family, specifically his son.

- Find the Name: Identify the name of the son.

The challenge usually arises at the first step because a baseline RAG retrieves text based on semantic similarity, not directly answering complex queries where specific details may not be explicitly mentioned in the dataset. To address such challenges, Microsoft Research introduced GraphRAG, a brand-new method that augments RAG retrieval and generation with knowledge graphs.

Graph RAG incorporates graph-based data structures into the retrieval process, allowing the model to retrieve and organize information based on entity relationships. This approach is particularly useful in contexts where the data structure is crucial for understanding, such as knowledge graphs, social networks, or semantic web applications.

Workflow:

- Query Input: The user submits a query.

- Graph Navigation: The model traverses the graph to retrieve both isolated information and the relationships between entities.

- Generation: The model synthesizes a response that reflects both the retrieved data and its relational context.

Graph RAG excels in domains requiring deep relational understanding. Ensuring that graph structures are updated and maintained accurately, as outdated or incomplete graphs could lead to incorrect or incomplete responses.

Sources:

RAG Architectures - Humanloop Blog,

From Local to Global: A Graph RAG Approach to Query-Focused

Summarization

4. Fine-tuning of LLMs

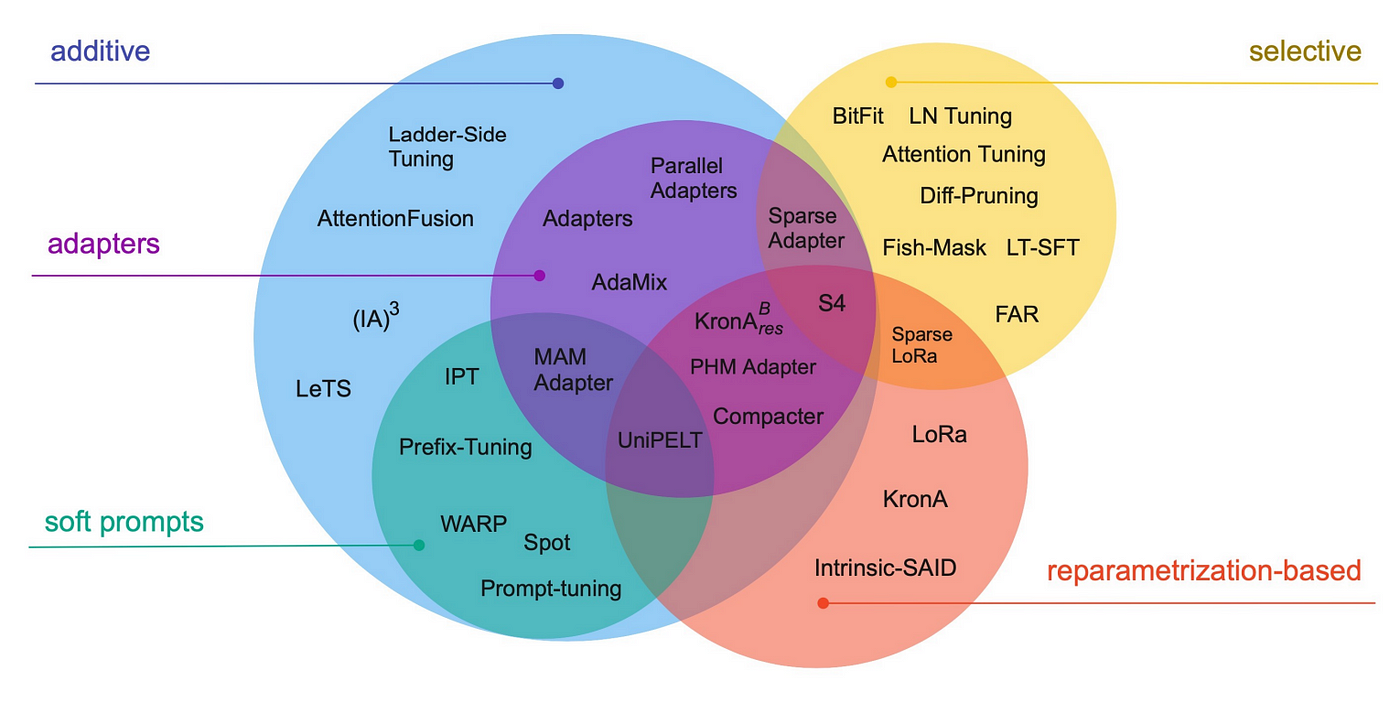

4.1 What is fine-tuning, and why is it needed?▼

Fine-tuning, in the context of machine learning, refers to the process of adapting a pre-trained model to perform specific tasks or meet particular needs. This technique, which falls under the domain of transfer learning, is a fundamental tool for training foundational models used in generative AI and other deep learning applications.

Training a machine learning model from scratch can be extremely resource-intensive and time-consuming, especially for models with millions or billions of parameters. Fine-tuning addresses this challenge by using the knowledge already acquired by a pre-trained model. Starting from a model with a general knowledge base, the adaptation process requires significantly less computational power and fewer labeled data compared to training a new model entirely from scratch.

Although fine-tuning is part of the broader training process, it is distinct from what is traditionally referred to as "training." For clarity, the initial training phase of a model is commonly termed pre-training. At the beginning of pre-training, a model starts with randomly initialized parameters, including weights and biases. The training process iteratively adjusts these parameters in two phases: Forward Pass and Backpropagation. In contrast, LLMs typically employ self-supervised learning (SSL) for pre-training. SSL uses unlabeled data and pretext tasks designed to derive ground truth from the data's inherent structure.

Fine-tuning is versatile and applicable across many fields:

- Natural Language Processing (NLP): Adjusting the conversational tone or enhancing the domain-specific knowledge of LLMs.

- Computer Vision: Adapting pre-trained convolutional neural networks (CNNs) or Vision Transformers (ViTs) for tasks such as image segmentation, object detection, or domain-specific classification.

- Generative Models: Modifying the style or content-generation capabilities of pre-trained models to meet specific requirements.

- Specialized Domains: Incorporating proprietary or domain-specific datasets into models.

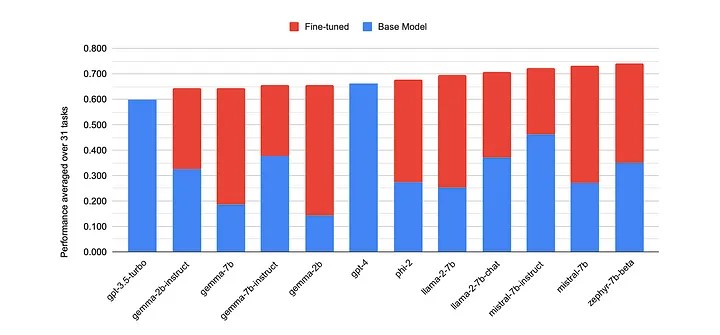

4.2 Which scenario do we need to fine-tune LLM?▼

Fine-tuning is necessary in the following scenarios to adapt large language models (LLMs) to specific requirements or use cases:

1. Tone, Style, and Format Customization

Fine-tuning is beneficial for tailoring an LLM to adhere to a particular persona, tone, or style that resonates with a specific audience. It is also helpful for structuring output in a preferred format like JSON, YAML, or Markdown. Custom datasets enable the LLM to generate responses that closely align with the intended user experience or expectations.

2. Increasing Accuracy and Handling Edge Cases

LLMs may struggle with certain challenges, such as hallucinations, subtle errors, or complex instructions that are not adequately addressed by prompt engineering or in-context learning. Fine-tuning can significantly improve accuracy in specific tasks, such as sentiment analysis, by leveraging a relatively small set of examples:

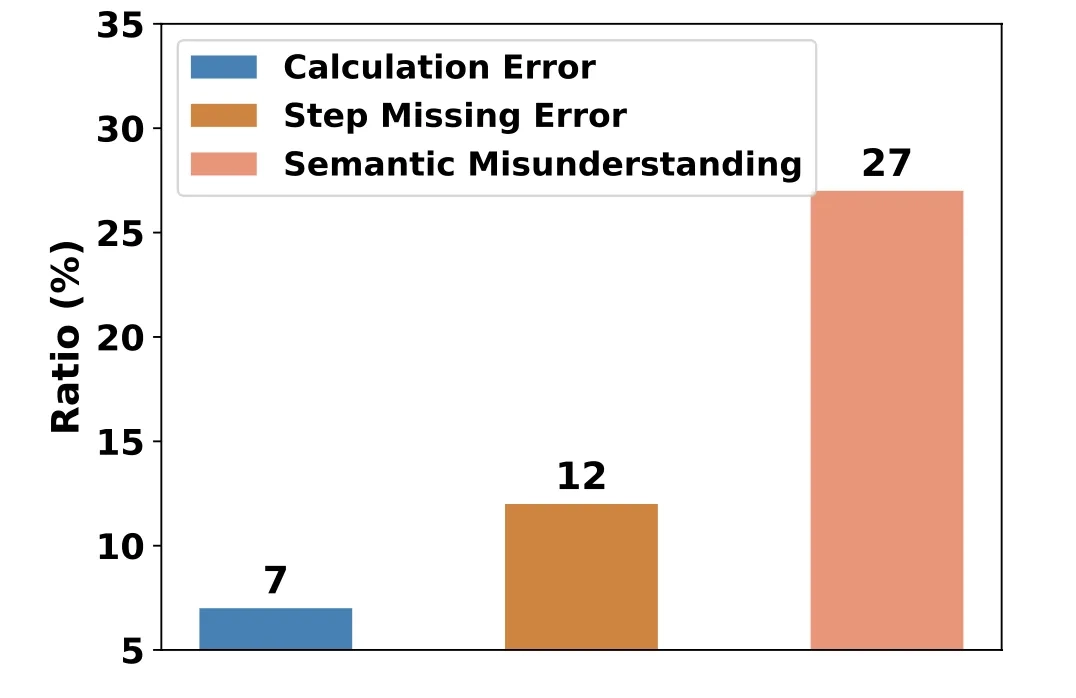

- For example, Phi-2's accuracy on financial data sentiment analysis increased from 34% to 85%.

- ChatGPT's Reddit comment sentiment analysis accuracy improved from 48% to 73% with just 100 examples. Fine-tuning is particularly effective for cases where baseline accuracy is under 50%, often yielding significant improvements with only a few hundred data samples.

3. Addressing Underrepresented Domains

LLMs are often trained on general data and might lack proficiency in specific or niche domains such as medical, legal, or financial sectors. Fine-tuning helps models to:

- Adapt to domain-specific jargon and terminology.

- Perform tasks like summarizing sensitive medical histories or handling data in underrepresented languages.

- Example: Fine-tuning with Parameter Efficient Fine-Tuning (PEFT) techniques improved performance across all tasks in Indic languages.

4. Cost Reduction

Fine-tuning can optimize a smaller model by transferring knowledge from a larger model, like GPT-4 distilled into GPT-3.5 or Llama 2 70B compressed into Llama 2 7B. This process reduces computational costs and latency while maintaining high quality. Fine-tuning also minimizes the need for detailed prompt engineering, leading to token savings and reduced operating costs.

5. Enabling New Tasks or Abilities

Fine-tuning can enable capabilities beyond the pretrained functionality of an LLM. For example:

- Utilizing or ignoring the context retrieved by external systems.

- Enhancing evaluation metrics such as compliance, helpfulness, or groundedness when fine-tuned as an LLM judge.

- Extending the model's context window to handle larger inputs efficiently.

Comparison with In-Context Learning (ICL) and Retrieval-Augmented Generation (RAG)

In-context learning is a simpler alternative that involves providing examples within the prompt to guide the LLM's response. While effective, it has limitations:

- As the number of examples increases, inference cost and latency grow.

- With too many examples, LLMs may ignore some, necessitating systems like RAG to retrieve the most relevant examples.

- LLMs may reproduce the knowledge from examples verbatim, a concern shared with fine-tuning. ICL serves as a valuable preliminary approach to assess whether fine-tuning could enhance performance on downstream tasks.

RAG systems incorporate external knowledge retrieval to supplement LLMs, making them suitable for dynamic data or knowledge-intensive tasks. Key considerations include:

- External Knowledge: RAG excels in injecting external information dynamically, whereas fine-tuning embeds static knowledge.

- Custom Requirements: Fine-tuning is ideal for aligning tone, behavior, or vocabulary.

- Hallucination Mitigation: RAG provides built-in mechanisms to reduce hallucinations, making it preferable in applications requiring high factual accuracy.

- Adaptability: Fine-tuning struggles with rapidly changing data, while RAG leverages live data sources.

- Interpretability: RAG inherently offers transparency with references, aiding in interpretability.

- Cost and Expertise: Building RAG systems may demand expertise in search systems, while fine-tuning necessitates robust data gathering and improvement strategies.

Often, combining fine-tuning and RAG yields the best results. Fine-tuning customizes the model's behavior, while RAG supplements it with dynamic, up-to-date knowledge. Cost, complexity, and additional benefits should guide the decision to adopt either or both approaches, with experimentation and error analysis providing critical insights for optimization.

Source: https://ai.meta.com/blog/when-to-fine-tune-llms-vs-other-techniques/

4.3 How to decide if fine-tuning is necessary?▼

Fine-tuning adapts LLMs to specific tasks or domains, often yielding significant performance gains. However, it's not always necessary or beneficial. Understanding when fine-tuning is required is critical for optimizing project success and resource allocation. Here's how to approach the decision:

Task Suitability

Fine-tuning is most effective for tasks requiring specialized domain knowledge. It also helps tailor models for highly specific tasks like structured data extraction, content moderation, or generating consistent brand-aligned content.

General-purpose models like GPT-4 and Llama 3 offer strong baseline performance. However, they can fall short for domain-specific tasks (e.g., clinical note analysis), specialized skills (e.g., advanced mathematics), or language-specific nuances (e.g., low-resource dialects).

Data Considerations

High-quality, task-specific data is essential for effective fine-tuning. For continual pre-training, terabytes of text data may be required, especially when introducing new domain-specific knowledge. Supervised fine-tuning typically needs fewer examples, ranging from hundreds for simple tasks to thousands for complex ones. Regardless of the approach, ensure data relevance, cleanliness, and proper licensing, and address privacy concerns where applicable.

Resource and Expertise Requirements

Fine-tuning can be resource-intensive, necessitating:

- Hardware: High-performance GPUs or TPUs.

- Financial investment: Costs for hosting a fine-tuned model may outweigh the benefits for low-volume applications.

- Expertise: Domain knowledge for prompt crafting, machine learning for evaluation metrics, and engineering for inference optimization in real-time applications.

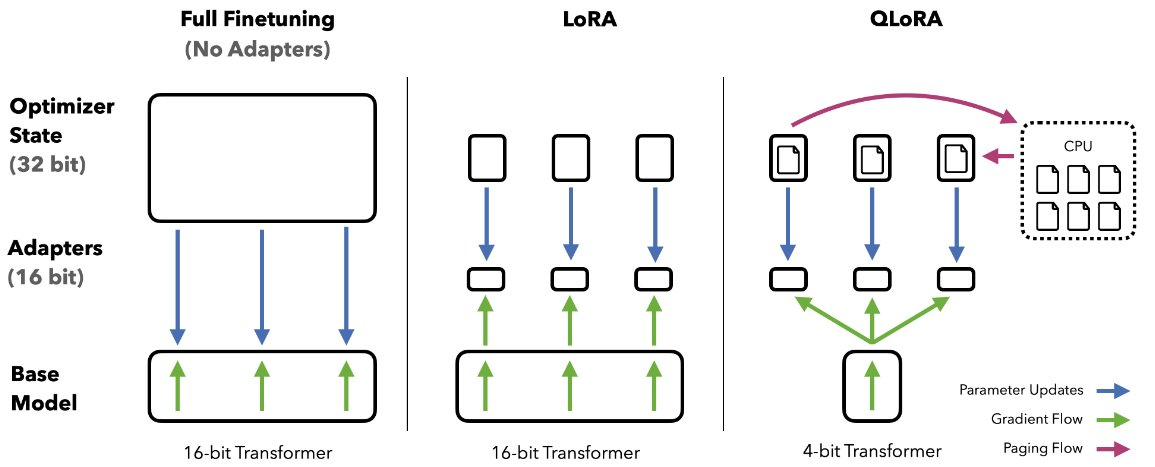

Recent innovations in fine-tuning techniques have made working with large models more accessible. A key example is QLoRA (Quantized Low-Rank Adaptation), which significantly reduces memory requirements compared to traditional fine-tuning approaches. To illustrate this, consider the peak GPU memory usage when fine-tuning Llama 2 7B using different methods:

| Fine-tuning Method | Peak GPU Memory (GB) |

|---|---|

| Full fine-tuning | 24.1 |

| LoRA | 21.3 |

| QLoRA | 12.3 |

Note: QLoRA achieves these results using 4-bit NormalFloat quantization.

Task Complexity and Specificity

Fine-tuning offers the most value for tasks with narrow, well-defined scopes. For example, sentiment analysis for a specific product category benefits more from fine-tuning than general text classification. Metrics like input/output lengths, data compressibility, and content diversity can help assess task complexity.